Executive Summary

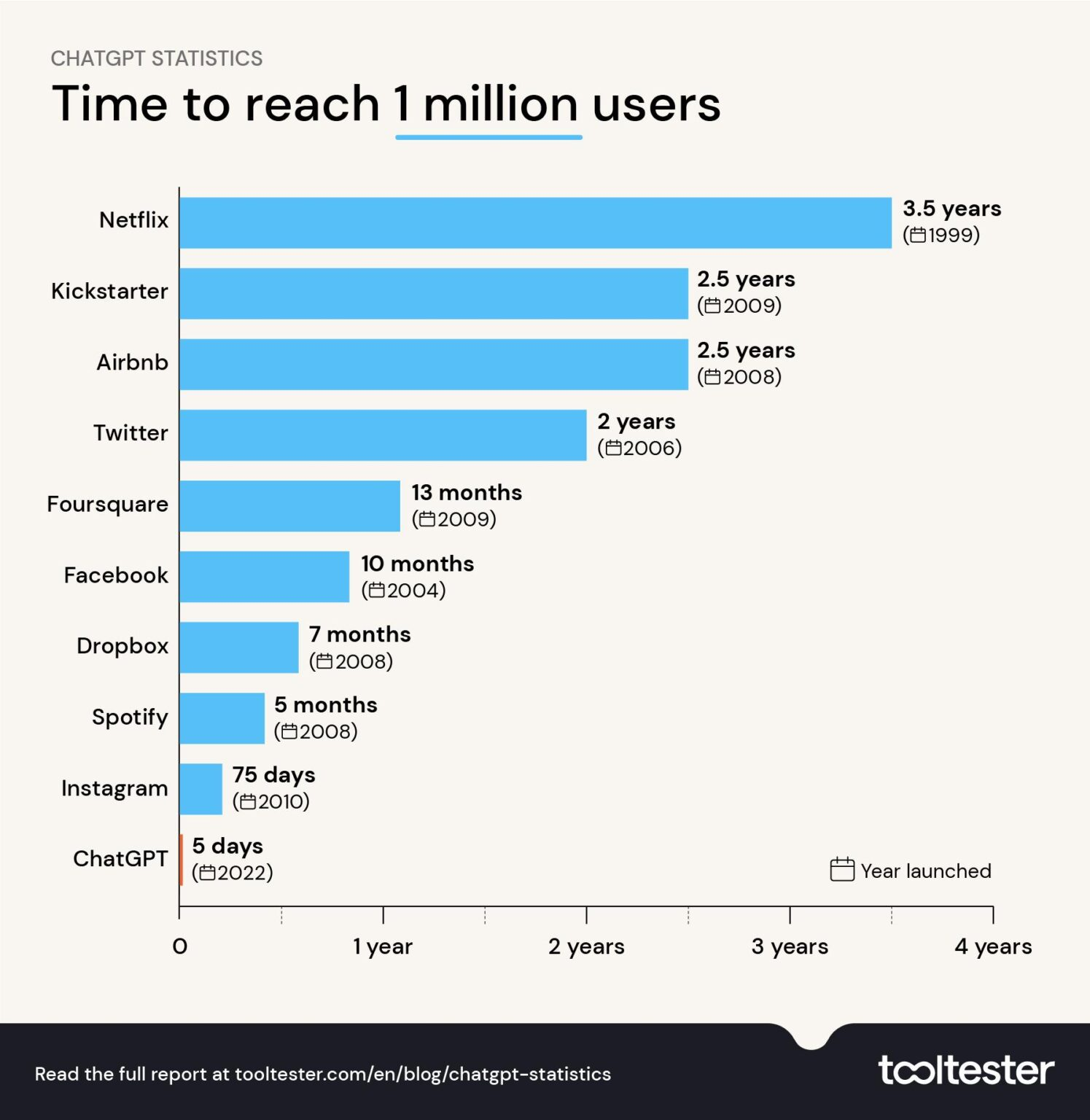

- ChatGPT is the fastest-growing app in history, reaching one million users in a week

- GPT-4 scores in the top 10% of test-takers in a simulated Bar exam

- Generative AI is already disrupting Internet search

- 2023 will see widespread adoption of Generative AI for personal and business use

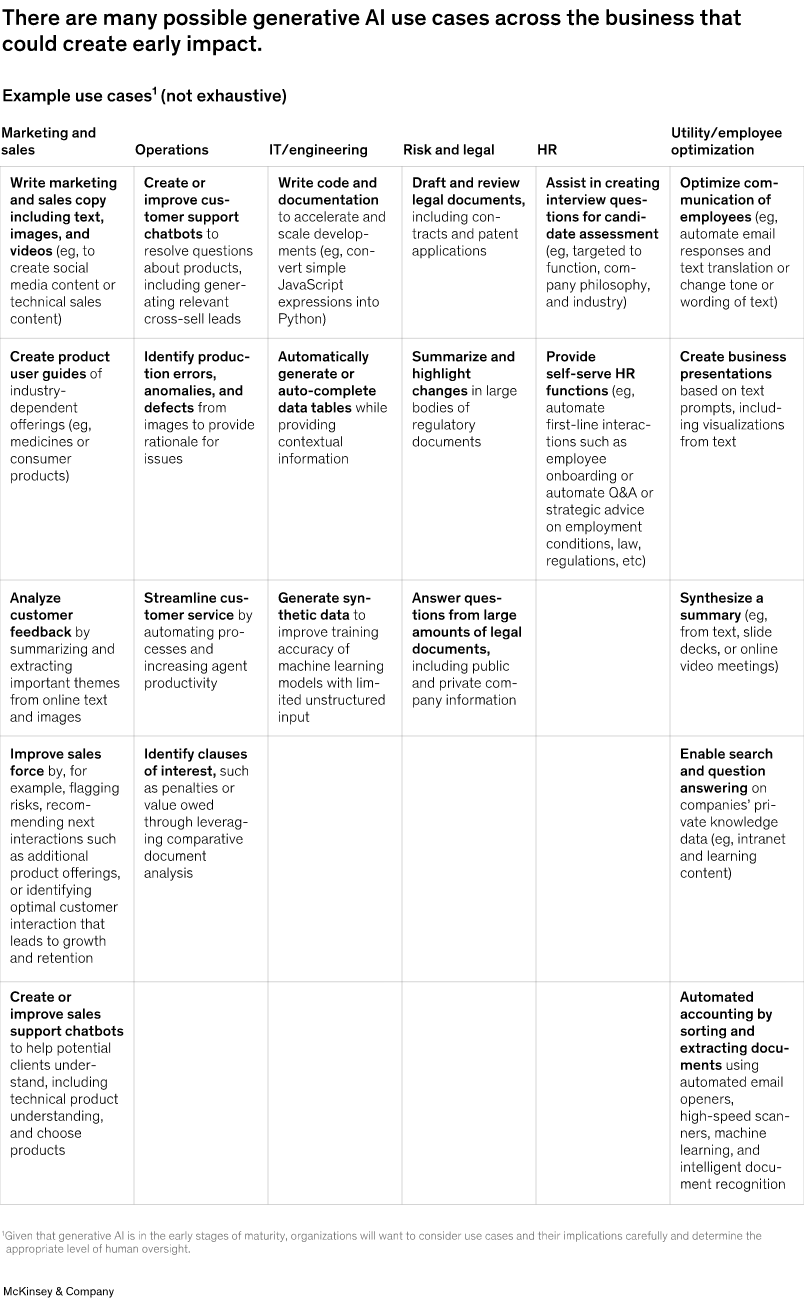

- Applying generative AI models to business data will create transformational opportunities

- Generative AI introduces novel and significant safety risks, ranging from mass disinformation to new cyber security threats

- Generative AI is one of the most powerful technologies created by humanity and must be treated accordingly

Once confined to pattern recognition and classification fields, Artificial Intelligence (AI) has now been given an entirely new set of capabilities. The rise of generative AI technologies brings the ability to synthesize novel content and engage in natural language conversations.

Importantly, these transformative capabilities are widely available, accessible, and virtually free. ChatGPT amassed a staggering one million users within its first week, setting a record for the fastest-growing user base in history.

It is crucial for business leaders to stay informed about generative AI and its implications. This article provides a high-level, non-technical overview of generative AI, discussing its functionality, capabilities, limitations, and future opportunities and risks.

How it Works

The underlying technologies driving these advancements are not new. Neural Networks (NNs) and Deep Generative Models (DGMs) have existed since the 1980s. Yet when combined with powerful parallel computing hardware and massive training datasets, AI can now synthesize complex, high-dimensional data such as images, text, music, or video. One well-known type of DGM is the Generative Adversarial Network (GAN), which is widely used for image synthesis. ChatGPT and other Large Language Models (LLMs), however, are built on a different neural network architecture called the Transformer.

Large Language Models have two phases: training and inference. First, the model is trained on real-world text, such as books, news articles, and Internet discussion forums. Training is computationally intensive, requiring significant computing power, time, and large, clean datasets. Using a trained model to make predictions is called inference. It requires far less computing power than training, and smaller models can even run on consumer-grade hardware. It is important to note that consumer LLMs do not learn over time from their experience. For example, GPT-4's training data was cut off in September 2021. Certain search products, such as Microsoft's Bing or Google's Bard, are connected to the Internet and can therefore pull up more recent data.

On a technical level, Large Language Models are just sequence predictors. In forming their responses, they repeatedly pick a probable next word based on statistical relationships inferred during training. Large Language Models possess no inherent factual knowledge; their apparent understanding is a sophisticated representation of the statistical connections between words. For example, an LLM will name the capital of the US as Washington, D.C. because of its frequent association with the term "capital" rather than through some explicit comprehension of this fact.
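To make the idea of a sequence predictor concrete, here is a minimal sketch in Python. It is a toy bigram model, not how ChatGPT or any real LLM is implemented: real models use neural networks over far longer contexts. The corpus, function names, and variable names are all made up for illustration. "Training" here just counts which word follows which, and "inference" turns those counts into probabilities for the next word.

```python
import random
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """'Training': count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            counts[current_word][next_word] += 1
    return counts

def next_word_probabilities(model, word):
    """'Inference': convert the stored counts into next-word probabilities."""
    followers = model.get(word.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the model never saw this word during training
    return {w: c / total for w, c in followers.items()}

def sample_next_word(model, word, rng=random):
    """Pick the next word at random, weighted by its probability."""
    probs = next_word_probabilities(model, word)
    if not probs:
        return None
    candidates = list(probs)
    weights = [probs[w] for w in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]

# Hypothetical toy corpus, invented for this example.
TOY_CORPUS = [
    "the capital of the us is washington",
    "washington is the capital of the us",
    "the capital of france is paris",
]

model = train_bigram_model(TOY_CORPUS)
print(next_word_probabilities(model, "capital"))  # "of" is the only follower seen
print(next_word_probabilities(model, "of"))       # "the" is twice as likely as "france"
print(sample_next_word(model, "is"))              # one of several equally likely words
```

The model "knows" that "of" follows "capital" only because it always did in the training text. Nothing in the code represents what a capital is, which is the point the paragraph above makes about statistical association versus comprehension.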

Despite their seeming simplicity, Generative AI models exhibit complex behaviors. When trained on large data sets, they have seemingly emergent properties, engaging in behaviors not explicitly programmed into them. For example, LLMs weren't taught the rules of grammar and syntax explicitly. Yet, they can generate highly coherent and fluent text that closely mimics human writing style and structure. Models have even developed abilities to perform simple arithmetic, translate text between languages, and generate realistic images from textual descriptions. Large Language Models are also very good at summarizing large amounts of data and extracting key insights.

Other models have been built specifically for image generation. A fake image of the Pope, made by Midjourney v5, became an instant Internet meme.

Adobe is launching Firefly, an AI art generator that can produce high-fidelity images comparable in quality to stock photos or real photography.

Potential Impact on Business

According to a September 2018 report by the McKinsey Global Institute, artificial intelligence has the potential to add around $13 trillion, or about 16 percent, to current global economic output by 2030. That amounts to an average annual contribution to productivity growth of about 1.2 percent through 2030.

This was before the ascendancy of Generative AI.
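As a rough sanity check on how the McKinsey figures above fit together, the short Python sketch below compounds 1.2 percent a year. It assumes a 12-year horizon (2018 to 2030) and simple annual compounding. Both are my assumptions, not necessarily the report's methodology, and the function and variable names are illustrative only.

```python
def compound_growth(annual_rate, years):
    """Cumulative growth from compounding a fixed annual rate."""
    return (1 + annual_rate) ** years - 1

ANNUAL_RATE = 0.012          # about 1.2 percent per year, as quoted above
YEARS = 2030 - 2018          # assumed horizon: report date to 2030

cumulative = compound_growth(ANNUAL_RATE, YEARS)
print(f"Cumulative uplift over {YEARS} years: {cumulative:.1%}")  # roughly 15 percent

# The baseline output implied by "$13 trillion is about 16 percent".
ADDED_OUTPUT_TRILLIONS = 13
SHARE_OF_CURRENT_OUTPUT = 0.16
implied_baseline = ADDED_OUTPUT_TRILLIONS / SHARE_OF_CURRENT_OUTPUT
print(f"Implied current global output: ${implied_baseline:.2f} trillion")
```

Under these assumptions the compounded figure lands slightly below the quoted 16 percent, which is close enough to show that the annual and cumulative numbers describe the same estimate.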

Productivity Automation

AI's role in business is about to undergo a dramatic transition. Generative AI can deliver transformation on a scale that previous AI efforts could not approach. Over the next 18 months, we will likely see wide bottom-up adoption of generalized ChatGPT-like models within businesses.

Generative AI will likely come to business in two phases. The first will be driven by individual usage of Generative AI. The second will be a more mature, top-down, and centralized adoption.

Phase 1: Bottom Up

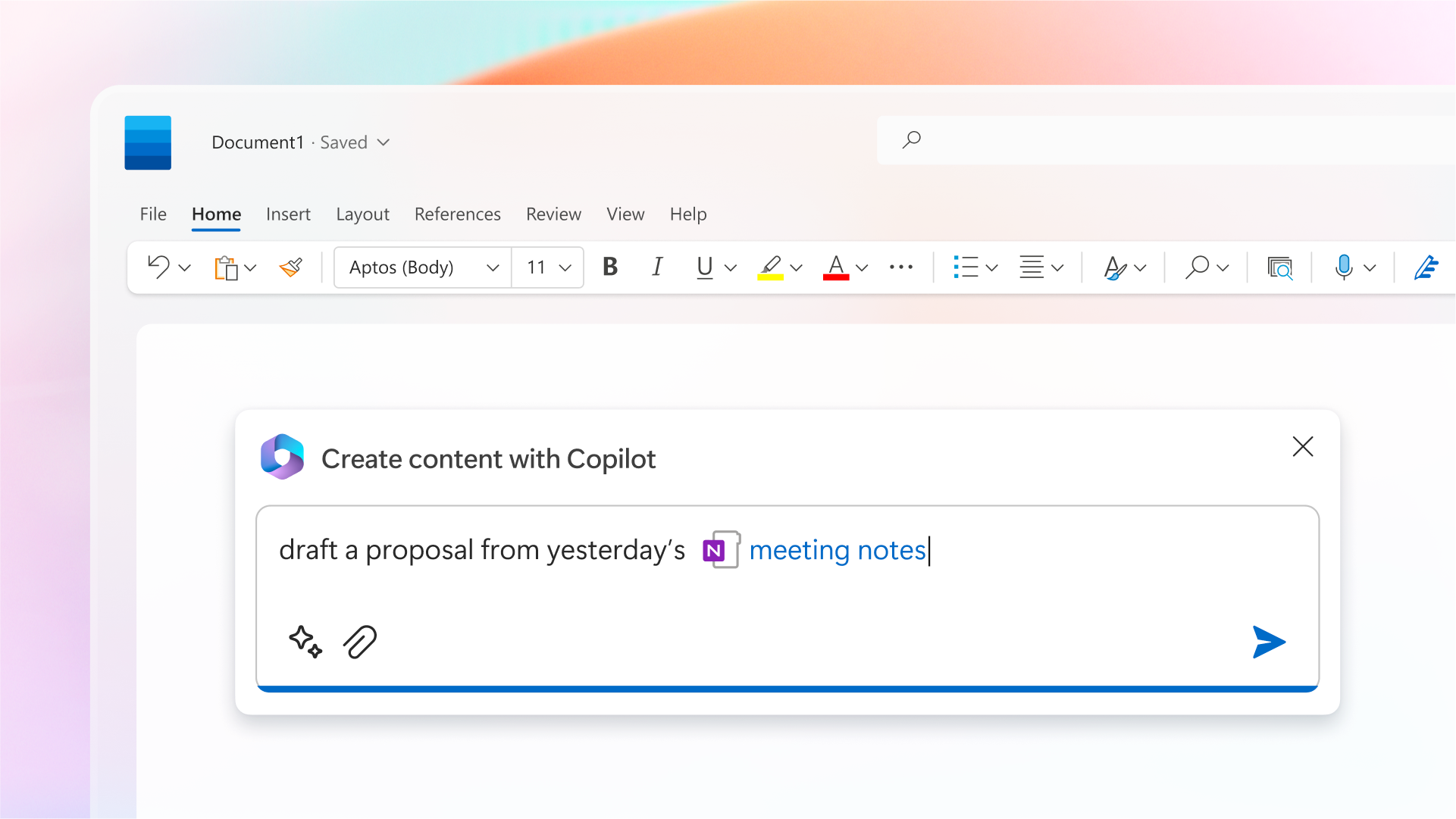

Phase 1 of adoption will be through decentralized usage of public generative AI systems such as ChatGPT, Bing, and Google's Bard. Individual workers will use generative AI to help with day-to-day tasks, such as drafting human resources announcements, marketing materials, and customer correspondence.

AI tools are already being integrated into popular software such as Microsoft Office and Google Docs.

Worryingly, such usage can lead to proprietary data being uploaded to the public cloud, outside regular enterprise data loss prevention controls. For example, a bug in ChatGPT recently exposed the titles of some users' chat histories to other users for a short time.


Phase 2: Top Down, Embedding AI in the Enterprise

In time, AI providers will offer secure siloed instances of generative models that integrate with internal systems and train on business data, automatically analyzing and interpreting complex data sets. They will be able to identify patterns, trends, and anomalies and generate insights and recommendations. Business intelligence and reporting will be completely transformed.

As these systems mature and integrate with existing systems, they will become more impactful. Domain-trained AI models have the potential to act as stewards of information within an organization: all-knowing oracles that do not sleep or miss a detail. API integrations will allow AI systems to perform simple tasks on behalf of employees, leading to increased automation.

Software Engineering

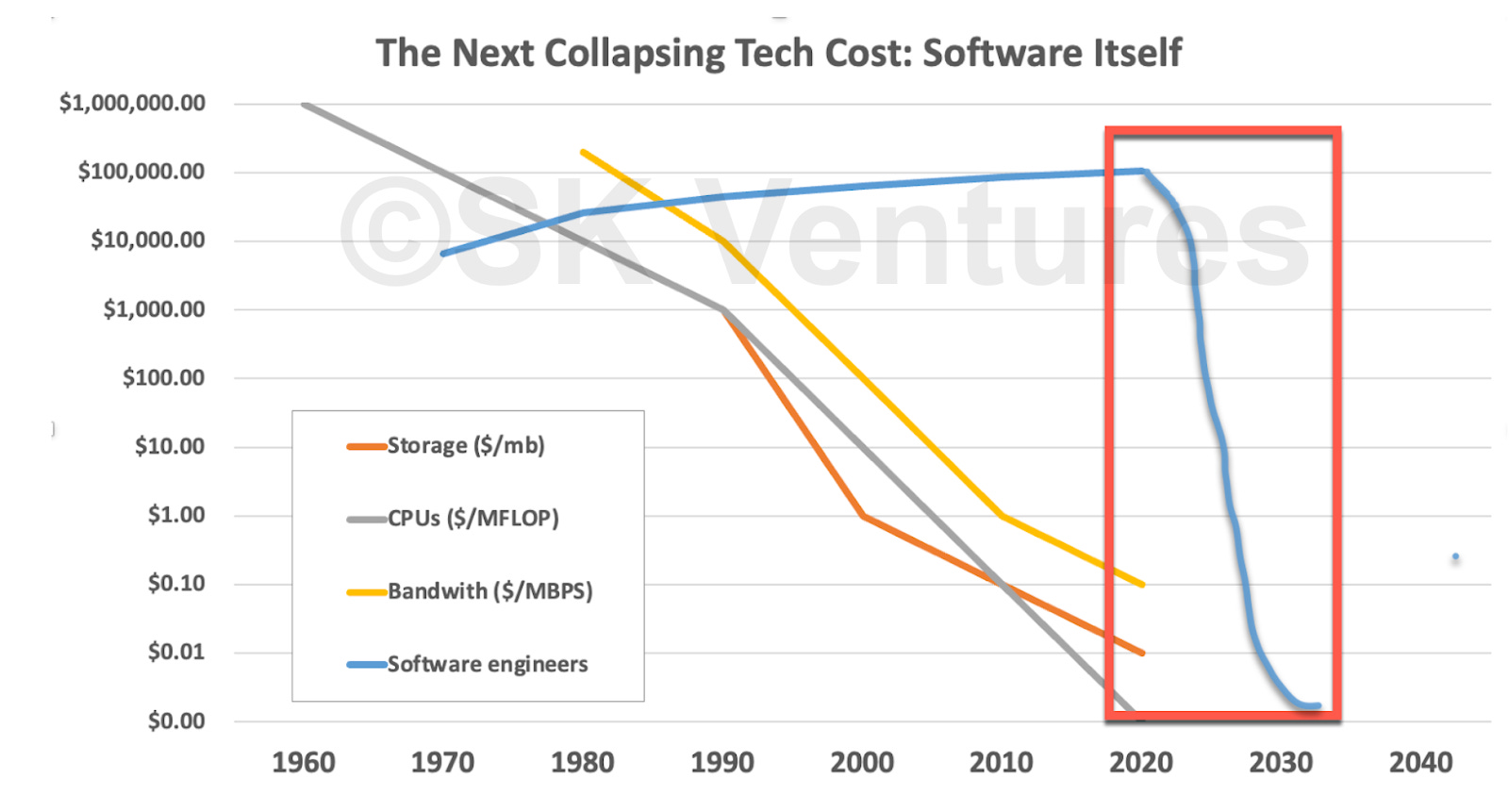

Generative AI models are very good at writing computer code. Microsoft GitHub Copilot is an AI-powered code generator already widely used by developers. GPT-4 can generate high-quality computer code from a natural language prompt.

With time, large AI models could replace specialized software, operating from high-level instructions to perform tasks that were not explicitly programmed.

Looking ahead to the labor market, it is likely that wage polarization will occur in the next few years. The best human coders who build these models may command higher wages, while the remaining workforce (e.g., the bottom nine deciles) may face downward wage pressure. Furthermore, new job roles are emerging in the form of individuals tasked with fine-tuning, providing feedback, monitoring, and managing the performance of these models.

Search, Content, and Advertising

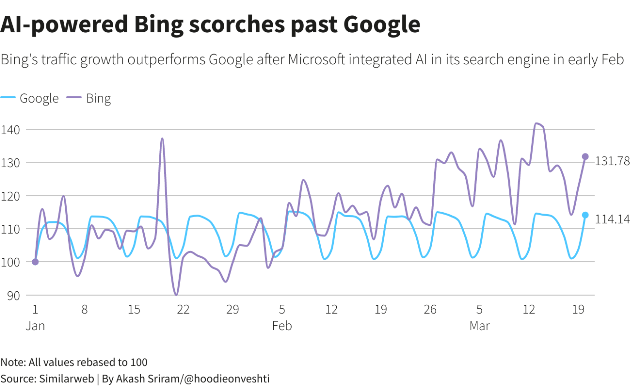

Generative AI, when connected to the Internet, surfaces precise, concise answers in a conversational format. This will change how information is discovered, consumed, and analyzed. The ramifications of this will transform the Web and its current ecosystem.

The Internet has been profoundly shaped by how we search it. Google is by far the largest marketing company in the world, and its name is still synonymous with finding stuff on the Internet. Yet it generates the vast majority of its revenue from online advertising. Thus, Google's best interests are not aligned with the needs of its users, who want to find information quickly and move on.

Google’s traditional search business will undoubtedly suffer as Generative AI models begin to incorporate up-to-date or real-time information. This is already happening: traffic to AI-enabled Bing rose 15% over a period in which Google’s traffic fell by 2.4%.

With less human traffic looking through ads and content, I suspect online advertising market dynamics will shift more towards content providers putting up paywalls. AI search tools will eventually integrate with subscription services to let them access content on your behalf.

Potential Risks

"Emergent Power Seeking Behavior"

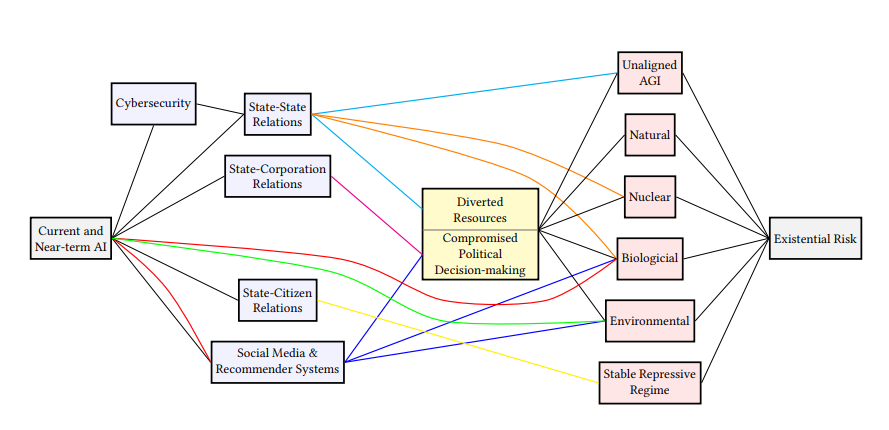

Generative AI technologies have been rapidly evolving without independent governance or oversight. The power and capabilities of these emergent systems raise questions about their potential impact on humanity and create significant and novel safety challenges.

For example, in their technical report, OpenAI notes how GPT-4 has emergent "power-seeking" behaviors:

Novel capabilities often emerge in more powerful models. Some that are particularly concerning are the ability to create and act on long-term plans, to accrue power and resources (“power-seeking”), and to exhibit behavior that is increasingly “agentic.” Agentic in this context does not intend to humanize language models or refer to sentience but rather refers to systems characterized by ability to, e.g., accomplish goals which may not have been concretely specified and which have not appeared in training; focus on achieving specific, quantifiable objectives; and do long-term planning. Some evidence already exists of such emergent behavior in models.

GPT-4 Technical Report (OpenAI)

Weaponizing AI can be an onramp to more dangerous outcomes. AI systems have already beaten human pilots in simulated aerial combat. Automated retaliatory systems have the potential to rapidly escalate conflicts.

Most recently, Elon Musk, Steve Wozniak, and over one thousand other tech leaders have signed an open letter calling for a six-month pause in training AI systems more powerful than GPT-4.

Cyber Security

At the most basic level, text-generating AI models can be used to craft spam messages that get around mail filters. The sheer scale and speed with which content can be generated are unprecedented.

More worryingly, advanced Generative AI models can create entirely new cyber attacks, reusing elements from successful exploits and mixing them in novel ways. They can also systematically scan for vulnerabilities at scale, tirelessly probing in a way no human attacker could.

Muddy Results

Neural networks are notoriously difficult to explain. They store information in a way that is more akin to a human brain, with meaning only emerging when observed at scale.

In addition, Large Language Models are susceptible to occasional mistakes or hallucinations. A hallucination occurs when a model produces a fluent, confident response that is not supported by the source content. AI hallucinations can pose a significant challenge to the reliability and safety of AI systems, particularly those used in high-stakes applications such as self-driving cars, medical diagnosis, and financial trading.

The hallucination phenomenon is still not completely understood.

Finally, the results Generative AI models produce are highly dependent on their training data. GPT-4 is trained on Wikipedia entries, journal articles, newspaper punditry, instructional manuals, Reddit discussions, social-media posts, books, and any other text its developers could commandeer. The exact constituents of the training set are not made public (nor are its content creators informed or compensated). Any selection bias present in the training data will affect the model's responses.

Over-personalization

Tailored content and recommendations strongly influence individual buying decisions, as evidenced by extensive efforts to profile users by social media companies and search engines.

Generative AI algorithms trained on individual profiles can exploit human vulnerabilities by targeting specific emotions, psychological traits, or biases, potentially leading to manipulation or coercion in decision-making processes.

Over-personalized content can also create echo chambers, where individuals are only exposed to information, opinions, and ideas that align with their existing beliefs and interests. This can lead to reduced critical thinking, intellectual growth, and a less-informed society.

State actors will likely attempt to use this technology to achieve political outcomes.

Conclusion

We are witnessing the rise of AI, and the effects on humanity will be profound.

In the near term, AI will transform how we search for and access information. Longer term, AI will proliferate into vertical applications such as life sciences, potentially improving our quality of life while reshaping the day-to-day.

Simultaneously, AI will create significant and novel challenges for humanity.

AI is one of the most powerful technologies conceived by humans and must be treated accordingly. Unlike advanced weapons technologies, the barrier to entry for using AI is almost non-existent...

Further Reading

Current and Near-Term AI as a Potential Existential Risk Factor

https://arxiv.org/pdf/2209.10604.pdf

GPT-4 Technical Report

https://cdn.openai.com/papers/gpt-4.pdf

Generative AI is here: How tools like ChatGPT could change your business

https://www.mckinsey.com/capabilities/quantumblack/our-insights/generative-ai-is-here-how-tools-like-chatgpt-could-change-your-business

Society's Technical Debt and Software's Gutenberg Moment

https://skventures.substack.com/p/societys-technical-debt-and-softwares

X-Risk Analysis for AI Research

https://arxiv.org/pdf/2206.05862.pdf

Pause Giant AI Experiments: An Open Letter

https://futureoflife.org/open-letter/pause-giant-ai-experiments/