Bringing the World to Your Ears

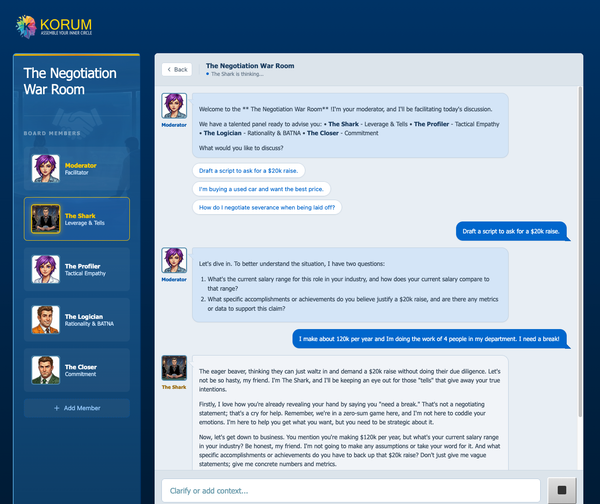

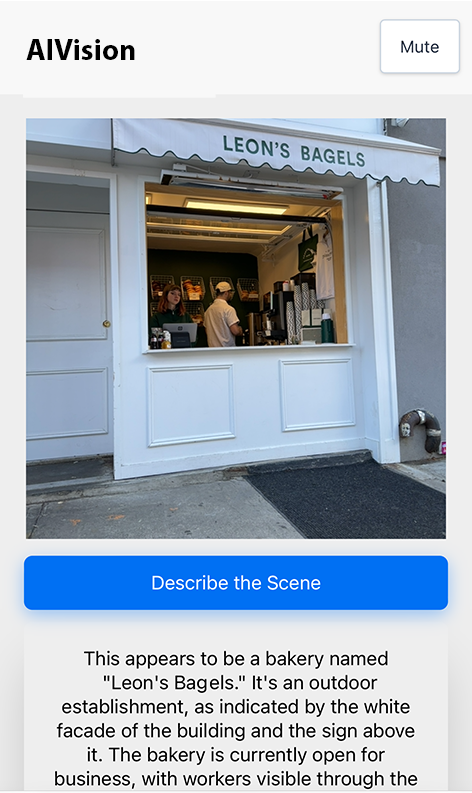

AIVision is an example of multi-modal AI applied to the real-world use case of visual accessibility. It narrates day-to-day experiences, describing the user's surroundings in near real-time with a human voice. AIVision runs in a web browser on any device, without the need to install an app.

Vision is the most complex of our senses, and the hardest one to replace or imitate. It is key to absorbing information, navigating everyday life, and interacting with other people. The density and sheer volume of visual information are unmatched.

While the auditory system can process approximately 100,000 bits per second, the human eye can process up to 100 times more, at an estimated 10,000,000 bits per second. Vision is incredibly hard to substitute: voice can be transcribed, and ideas can be written as text, yet there is no comparably effective substitute for visual information.

People with limited vision must rely on other senses, or use aids to help navigate the world. Only recently have we started to see a new set of capabilities emerge that have the potential to transform lives.

I created AIVision as a practical demonstration of advanced multi-modal AI capabilities. It is an open-source web app that provides narration of the surrounding environment using a human-like voice in near real-time.

Because AIVision is built on modern multi-modal LLMs, it can cope with a wide range of situations. It doesn't need to be trained on every specific circumstance or object; instead, it is able to reason about new, never-before-seen information. This ability to generalize fundamentally sets it apart from past attempts at computer vision.

AIVision can:

- Broadly describe the environment around the user

- Provide spatial awareness

- Describe facial expressions

- Describe objects in front of the user

- Narrate screens and signs

- Distinguish colors and clothing

- Help locate items

- and much more...

AIVision uses the phone's outward-facing camera to see the world, and it speaks to the user via the phone's speaker or headphones. When the user taps the screen, AIVision only needs a brief moment to analyze the scene, pick out the items the user might want to be aware of, and begin to describe them via audio.
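The tap, analyze, and speak flow described above can be sketched as a small orchestrator. This is a minimal illustration, not the code from the AIVision repository: `NarrationDeps`, `createTapHandler`, and the three injected functions are hypothetical names. In a browser, `captureFrame` would typically sit on top of `navigator.mediaDevices.getUserMedia({ video: { facingMode: "environment" } })` and a canvas, and `speak` could use the Web Speech API or a hosted text-to-speech model.

```typescript
// Hypothetical sketch: none of these names come from the AIVision codebase.
type Frame = Uint8Array; // encoded image bytes (for example a JPEG) from the camera

interface NarrationDeps {
  captureFrame: () => Promise<Frame>;                // grab one still frame from the camera
  describeScene: (frame: Frame) => Promise<string>;  // ask a multi-modal model for a description
  speak: (text: string) => Promise<void>;            // read the description aloud
}

// Returns a handler to attach to the screen's tap/click event.
// The handler resolves to true if a narration ran, or false if the tap was
// ignored because an earlier narration was still in progress.
function createTapHandler(deps: NarrationDeps): () => Promise<boolean> {
  let busy = false;
  return async () => {
    if (busy) return false; // avoid overlapping narrations
    busy = true;
    try {
      const frame = await deps.captureFrame();
      const text = (await deps.describeScene(frame)).trim();
      if (text.length > 0) {
        await deps.speak(text);
      }
      return true;
    } finally {
      busy = false; // always release the guard, even if a step throws
    }
  };
}
```

Keeping the three steps behind injected functions means the camera, the model, and the voice can each be swapped without touching the tap logic, and the whole-screen tap target stays usable without looking at the display.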

The key design principles have been simplicity and usability. To avoid pulling the user out of their environment, the descriptions it reads are concise. One doesn't actually need to look at the screen to use the app. I also focused on performance: in most cases AIVision only needs a fraction of a second to understand what is in front of it.

AIVision is privacy-focused and secure by design: it does not rely on commercial providers such as OpenAI. Instead, it is engineered to use AI models as a service from various providers. As new models become available, they can be easily incorporated into the app.

The hosted version uses the @cf/unum/uform-gen2-qwen-500m model, hosted via the Cloudflare Workers AI API. The provided code repository includes hooks for several other providers.
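As a rough illustration of what a call to this model might look like, the sketch below builds a request for the Workers AI REST endpoint and reads the description out of the response. It is not taken from the AIVision repository. The helper names, the account ID and API token parameters, the `max_tokens` default, and the assumed request and response shapes (`image` as an array of byte values, `result.description` in the reply) are assumptions that should be checked against Cloudflare's current documentation.

```typescript
// Assumption-heavy sketch: helper names and payload shapes are illustrative only.
const MODEL = "@cf/unum/uform-gen2-qwen-500m";

interface WorkersAiRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Builds (but does not send) a request asking the model to describe an image.
function buildDescribeRequest(
  accountId: string,  // placeholder: your Cloudflare account ID
  apiToken: string,   // placeholder: an API token allowed to run Workers AI
  image: Uint8Array,  // encoded image bytes, e.g. a JPEG camera frame
  prompt: string,
  maxTokens = 64      // assumed default; short outputs keep narration concise
): WorkersAiRequest {
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai/run/${MODEL}`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      // Assumed input shape: the image as a plain array of byte values.
      body: JSON.stringify({ image: Array.from(image), prompt, max_tokens: maxTokens }),
    },
  };
}

// Pulls the description text out of a parsed JSON response.
// Assumed response shape: { result: { description: string }, ... }.
function extractDescription(payload: unknown): string {
  const result = (payload as { result?: { description?: unknown } } | null)?.result;
  const description = result?.description;
  if (typeof description !== "string") {
    throw new Error("Unexpected response shape from the model endpoint");
  }
  return description.trim();
}
```

A caller would pass `url` and `init` to `fetch` and hand the parsed JSON to `extractDescription`. Because the API token must stay secret, such a call would normally be made from a server-side component rather than directly from the browser.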

Try AIVision

Works on any device, phone or desktop.

Please note, this is a public preview that is rate limited to reduce hosting costs.

Limitations

AIVision is based on cutting edge technology that is still in its infancy.

It does not always present the most relevant information. It can misidentify items, particularly at a distance and in poor lighting conditions.

Multi-modal models are still in their infancy, and there is much work to do in understanding how best to train and tune them. There needs to be a greater focus on quality, rather than chasing artificial benchmarks. Models often exhibit non-uniform performance, excelling in one area and failing in another. The wide range of real-world use cases will continue to challenge these models and expose their limitations.

Future

We are incredibly optimistic about the future. As the performance and intelligence of these models continue to grow, we will reach a point where we will be processing real-time video streams, with cross-temporal reasoning about events past and future.

As multi-modal models continue to evolve, more emphasis will be placed on real-time, point-of-view vision. Most likely this will take the form of glasses with built-in sensors.

One can imagine a wearable AI assistant that experiences the world as you do and can chime in with timely advice and suggestions, or even save your life in a moment of unseen danger.