Are Humanoid Robots the Answer to the Global Worker Shortage?

A perfect storm of AI advancement, deglobalization, and an aging workforce is creating a critical worker shortage. Humanoid robots are no longer science fiction but an essential solution to this unprecedented economic challenge. I examine the leading contenders in the field and their cutting-edge technology.

We're witnessing a profound system-level shift, driven by the convergence of three exponential megatrends:

- Technological Explosion: The startling, non-linear acceleration in artificial intelligence, particularly with the rise of generative AI, is fundamentally changing the cost of prediction and cognition.

- Deglobalization: The era of hyper-globalization is unwinding, replaced by a multi-polar world defined by strategic competition and the re-shoring of critical supply chains.

- The Great Demographic Reversal: Aging populations and shifting labor dynamics across the planet are upending the economic assumptions of the past 40 years.

The combinatorial effect of these forces is creating the economic and strategic imperatives for a new automation paradigm. This isn't a linear progression; it's a phase transition.

For half a century, industrial robots have been physically powerful but intellectually brittle, confined to the predictable cages of a production line where they perform a single, repetitive task. The recent breakthroughs in generative AI are fundamentally changing this. We are now shifting from programmed automation to general-purpose, embodied intelligence—machines that can reason about the physical world and adapt to its inherent unpredictability.

But this technological leap is not happening in a vacuum. It is colliding with a demographic imperative that makes its deployment an economic necessity: the "Silver Tsunami."

The sheer scale of population aging is difficult to overstate. By 2030, one in six people on the planet will be over 60. In the U.S., the cohort aged 65 or older is projected to grow by nearly 50% by 2050. This isn't an abstract future; it's happening now. In the U.S. alone, 10,000 Baby Boomers reach retirement age every day, a trend draining decades of accumulated knowledge from the workforce. (Source: Randstad)

The consequence is an unprecedented structural shortfall in our labor force, with projections showing a gap of some 6 million workers in the U.S. before the end of this decade. This isn't a cyclical downturn that policy can easily fix; it's a permanent demographic reality.

It is this immense demand pressure that transforms humanoid robotics from a technological novelty into an essential component of our future economy. The forecasts are beginning to reflect this new reality. Morgan Stanley now projects the U.S. humanoid robot population could swell from 40,000 in 2030 to 63 million by 2050, representing a potential $3 trillion impact on wages.
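To put the Morgan Stanley forecast in perspective, here is a quick back-of-envelope calculation of the compound annual growth rate it implies. The inputs are the figures quoted above; treating the ramp as smooth, constant growth is a simplification, since real adoption curves rarely look like that.

```python
# Implied compound annual growth rate (CAGR) of the projection quoted above.
START_UNITS = 40_000        # projected U.S. humanoid robots in 2030
END_UNITS = 63_000_000      # projected U.S. humanoid robots in 2050
YEARS = 2050 - 2030


def cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


growth = cagr(START_UNITS, END_UNITS, YEARS)
print(f"Implied growth: {growth:.1%} per year for {YEARS} years")
```

The implied rate comes out at roughly 44-45% a year, sustained for two decades, which shows how steep the projected ramp is.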

The Shape of Robots

Humanoid startups are rising on a simple idea: build robots like us to fit into our world. With arms and legs, they could use our tools, open doors, and climb stairs—aiming for true general-purpose use. But biology is complex; even standing upright demands constant, difficult computation.

Contrast this with the humble Roomba, perhaps the most successful domestic robot to date. It represents the opposite philosophy: radically simplifying the problem by ignoring the human form entirely. Its disc shape is optimized for moving under furniture, its sensors for detecting edges. By narrowing its focus exclusively to cleaning floors, it achieves reliability and affordability at a scale of millions of homes.

| Specialized Robotics | Humanoids |
|---|---|
| ✅ Technology readily available; less mechanically and computationally intense | ✅ Capable of accomplishing complex tasks that require advanced dexterity and/or intelligence |
| ✅ Highly effective at simple, repetitive tasks that require limited dexterity | ✅ Limited need to modify existing workplace or methods; interchangeable with humans |
| ❌ Ineffective at tasks that require advanced dexterity and/or intelligence | ❌ Technology still in development; requires highly advanced AI and mechanical engineering |
| ❌ May require significant modifications to the workplace to accommodate | ❌ May require significant training / trial & error |

This isn't merely a design preference; it's a fundamental strategic choice. The humanoid approach is a bet on general intelligence, aiming for a single, adaptable platform for a vast array of tasks. The specialized approach, meanwhile, wins on efficiency and reliability for any single, well-defined problem.

What’s fascinating, and what accelerates this entire field, is that the traditional hardware building blocks (electric motors, batteries, and manufacturing capacity) are becoming increasingly commoditized.

The physical side of robotics is no longer the primary bottleneck.

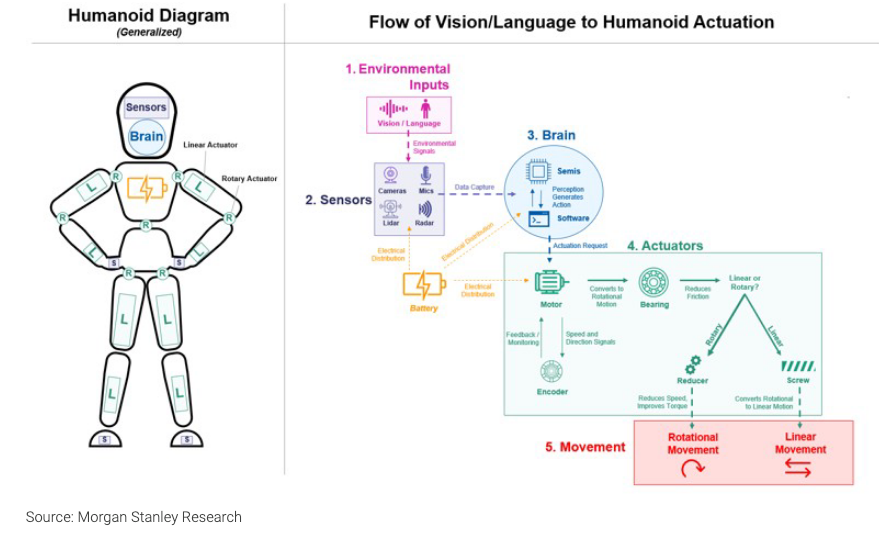

Anatomy of a General-Purpose Robot

An advanced humanoid robot is a sophisticated machine that combines AI and robotics to perform complex tasks with a high degree of autonomy, adaptability, and precision. Such robots are characterized by their ability to make intelligent decisions, interact with their environment, and learn from experience, often exceeding the capabilities of traditional robots.

| Trait | Description |
|---|---|
| Vision systems and LLMs | Enhances navigation and understanding |
| Dexterity and motion control | Enables human-like movement and precision |
| Autonomy and AI reasoning | Facilitates intelligent decision-making |
| Natural language interaction | Enables understanding of spoken commands |
| Real-time feedback and sensing | Provides environmental awareness |

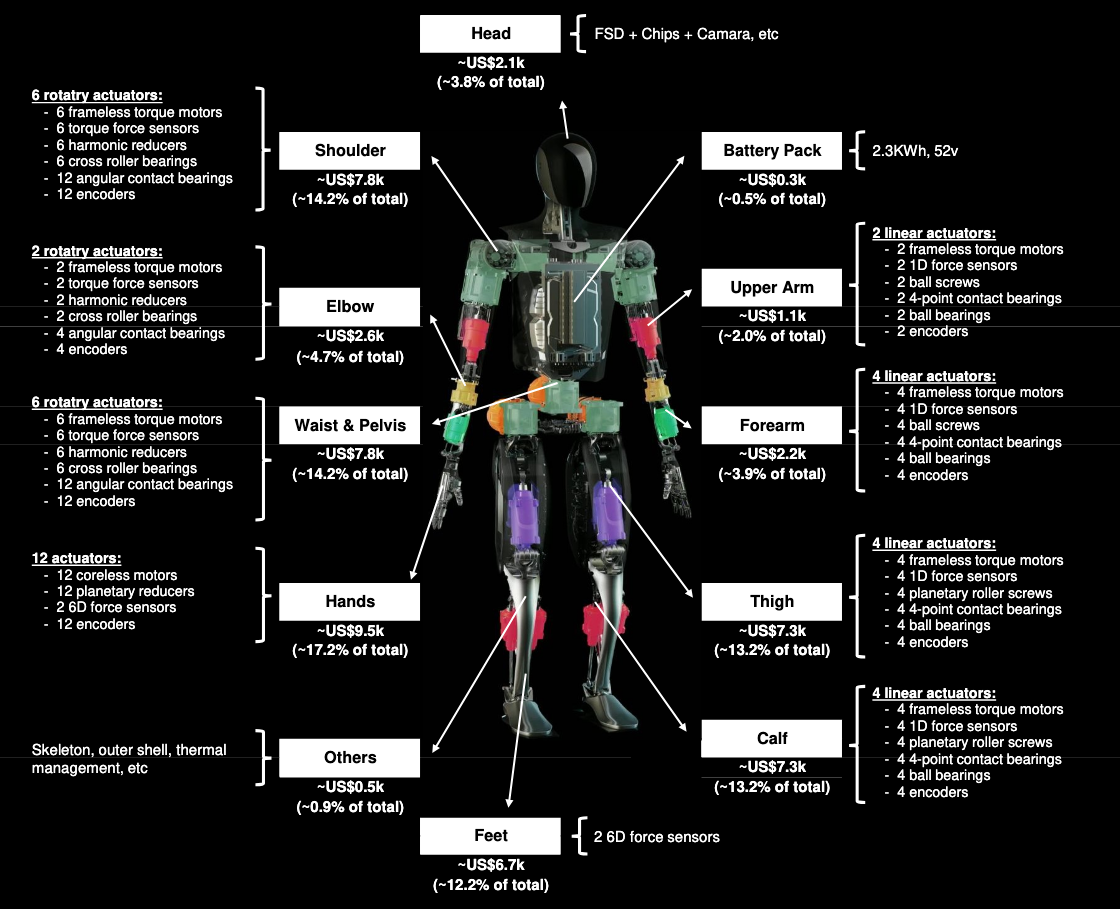

The cost of building humanoid robots has fallen dramatically: estimated production costs dropped by approximately 40% in just one year, from a range of $50,000-$250,000 per unit to $30,000-$150,000. This unprecedented cost reduction is driven by multiple converging factors that are fundamentally changing the economics of robotics manufacturing.

The explosive growth of the drone and electric vehicle markets has created massive demand for high-performance electric motors, driving down unit costs through economies of scale. Extremely cheap yet power- and torque-dense electric motors from the RC airplane and drone markets can be repurposed for robotics at a fraction of traditional costs.

High-performance processors suitable for robotics applications have seen dramatic price reductions due to volume production for consumer markets. Edge computing capabilities that once required expensive workstations are now available in sub-$100 modules.

The sensor systems that enable humanoid perception and control, which previously cost $5,000-$15,000 per unit, are benefiting from spillovers from the smartphone and automotive industries. High-precision IMUs, cameras, force sensors, and LiDAR units have become steadily cheaper as volumes increase across multiple industries.

Industrial robot component prices are experiencing sustained decline, with key components dropping approximately 1% annually starting in 2024. The average revenue per unit (ARPU) for industrial robots has fallen from $31,100 in 2018 to $25,600 in 2024—a 17.7% reduction. (Source: Manufacturing Automation Industrial Robot Components Report)
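The percentage figures in this section can be checked directly against the dollar amounts quoted. This small calculation uses only those figures; `pct_decline` is just an illustrative helper.

```python
def pct_decline(before: float, after: float) -> float:
    """Fractional decline from `before` to `after` (0.25 means a 25% drop)."""
    return (before - after) / before


# Humanoid production-cost range: $50k-$250k falling to $30k-$150k.
low_end = pct_decline(50_000, 30_000)
high_end = pct_decline(250_000, 150_000)

# Industrial-robot ARPU: $31,100 (2018) falling to $25,600 (2024).
arpu = pct_decline(31_100, 25_600)

print(f"Humanoid cost, low end:  {low_end:.1%}")
print(f"Humanoid cost, high end: {high_end:.1%}")
print(f"Industrial robot ARPU:   {arpu:.1%}")
```

Both ends of the humanoid production-cost range fall by exactly 40%, and the ARPU decline works out to about 17.7%, matching the report's figure.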

China is home to approximately 63% of the key companies in the global supply chain for humanoid robot components, enabling significantly lower production costs and faster time-to-market. The country also dominates critical materials, controlling 90% of rare earth magnet production and 75% of global battery cell capacity.

Powering a Walking AI Supercomputer

| Technology | Energy Density | Safety | Cycle Life (cycles) | Cost | Availability |
|---|---|---|---|---|---|
| Li-ion | 150-250 Wh/kg | Moderate | 500-1,500 | Low | High |
| LiFePO4 | 90-150 Wh/kg | High | 2,000-5,000 | Moderate | High |
| Semi-Solid | 200-330 Wh/kg | High | 800-1,500 | Moderate | Limited |
| Solid-State | 300-400+ Wh/kg | Very High | 1,000+ | High | Emerging |

Current lithium-ion systems typically provide 2-4 hours of operational runtime for humanoid robots. Mass and volume set a hard ceiling on stored energy: a humanoid modeled after an 80 kg adult occupies only about 80 liters. Current LFP batteries provide 150-200 Wh/kg and high-nickel ternary batteries reach 250-300 Wh/kg, so even if the robot's entire 80 kg mass were battery, capacity would top out at roughly 12-24 kWh. In practice, the battery pack is only a small fraction of the body. This constraint becomes more severe as robots incorporate power-hungry AI chips and advanced computing systems.
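The capacity ceiling above is simple arithmetic: battery mass times specific energy. The sketch below reproduces it and then estimates runtime for a realistic pack as energy divided by average power draw. The 500 W average draw is a hypothetical placeholder, not a measured figure for any robot; the 2.3 kWh pack size is the figure Tesla cites for Optimus.

```python
# Back-of-envelope battery math for a humanoid robot.
# Mass and specific-energy values come from the text above;
# AVERAGE_DRAW_W is a hypothetical placeholder, not a measured figure.
BODY_MASS_KG = 80.0        # robot modeled after an 80 kg adult
LFP_WH_PER_KG = 150.0      # low end of the LFP range quoted above
TERNARY_WH_PER_KG = 300.0  # high end of the high-nickel ternary range
AVERAGE_DRAW_W = 500.0     # hypothetical average draw (actuators + compute)


def pack_energy_kwh(mass_kg: float, wh_per_kg: float) -> float:
    """Energy stored in a battery of the given mass, in kWh."""
    return mass_kg * wh_per_kg / 1000.0


def runtime_hours(energy_kwh: float, draw_w: float) -> float:
    """Hours of operation at a constant average power draw."""
    return energy_kwh * 1000.0 / draw_w


# Theoretical ceiling: pretend the robot's entire mass is battery.
ceiling_low = pack_energy_kwh(BODY_MASS_KG, LFP_WH_PER_KG)
ceiling_high = pack_energy_kwh(BODY_MASS_KG, TERNARY_WH_PER_KG)

# A realistic pack: 2.3 kWh, the figure Tesla cites for Optimus.
realistic_runtime = runtime_hours(2.3, AVERAGE_DRAW_W)

print(f"Ceiling: {ceiling_low:.0f}-{ceiling_high:.0f} kWh")
print(f"2.3 kWh at {AVERAGE_DRAW_W:.0f} W average draw: {realistic_runtime:.1f} h")
```

Halving the average draw doubles the runtime, which is why power-hungry onboard AI compute eats directly into operating hours.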

Solid-state batteries represent the most promising advance for humanoid robotics, offering energy densities of 300-400+ Wh/kg versus 150-250 Wh/kg for conventional lithium-ion systems. Companies like EVE Energy have begun mass production of all-solid-state batteries achieving energy densities of up to 300 Wh/kg or 700 Wh/L.

UBTECH bills its Walker S2 as the world's first humanoid robot capable of autonomously swapping its own battery. The robot can sense low battery levels, navigate to a charging station, and complete the battery exchange in under three minutes without human intervention.
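UBTECH has not published how Walker S2's swap logic works. Purely as an illustration, the self-service loop described above can be modeled as a three-state machine. The 20% threshold and the `Mode` and `next_mode` names are hypothetical.

```python
from enum import Enum, auto

# Hypothetical threshold; a real system would tune this per robot and task.
LOW_BATTERY = 0.20


class Mode(Enum):
    WORKING = auto()
    GOING_TO_STATION = auto()
    SWAPPING = auto()


def next_mode(mode: Mode, charge: float, at_station: bool, swap_done: bool) -> Mode:
    """Advance the self-service battery loop by one decision step."""
    if mode is Mode.WORKING and charge < LOW_BATTERY:
        return Mode.GOING_TO_STATION     # low battery sensed: leave the task
    if mode is Mode.GOING_TO_STATION and at_station:
        return Mode.SWAPPING             # arrived: begin the exchange
    if mode is Mode.SWAPPING and swap_done:
        return Mode.WORKING              # fresh pack installed: resume work
    return mode                          # otherwise keep doing the same thing


# Walk through one full cycle.
mode = Mode.WORKING
mode = next_mode(mode, charge=0.55, at_station=False, swap_done=False)  # still working
mode = next_mode(mode, charge=0.18, at_station=False, swap_done=False)  # low battery
mode = next_mode(mode, charge=0.17, at_station=True, swap_done=False)   # at station
mode = next_mode(mode, charge=1.00, at_station=True, swap_done=True)    # swap done
print(mode)
```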

Applications

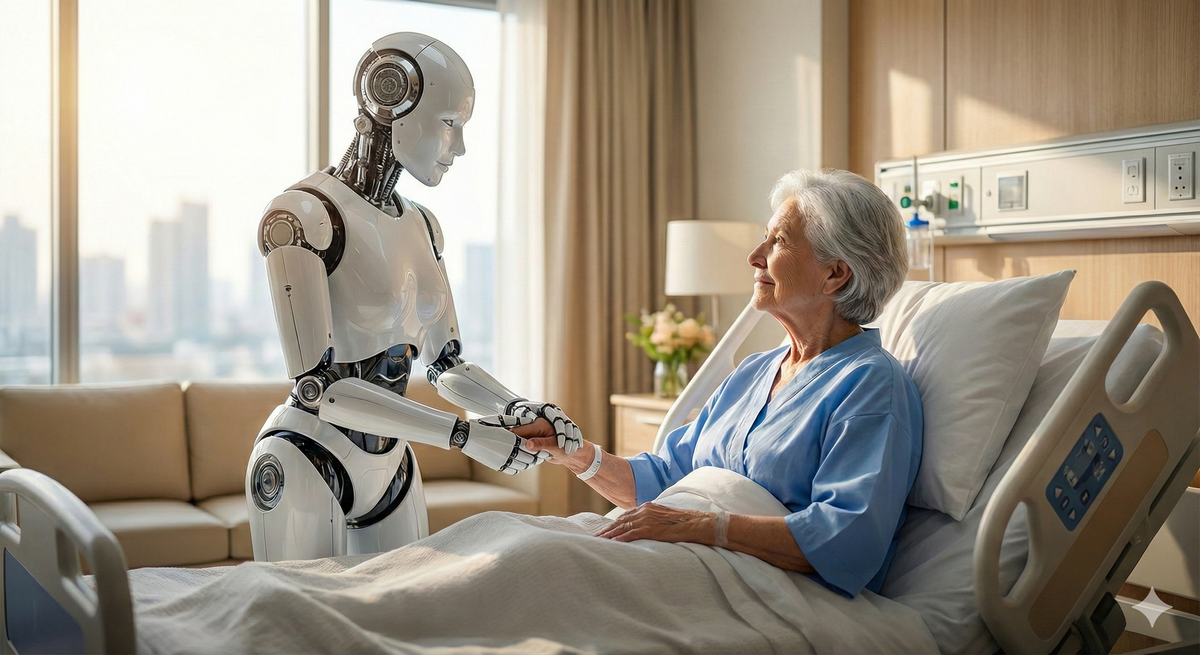

Advances in AI will directly expand the scope of tasks humanoid robots can perform. Initially, these general-purpose robots will be deployed in structured environments like manufacturing and logistics. As their autonomy increases, they will transition to more dynamic roles, working collaboratively alongside human teams.

| Sector | Roles |
|---|---|
| Agriculture | Planting, harvesting, crop management, precision seeding, irrigation management, crop health monitoring |
| Construction | Material handling, assembly, precise placement, tool use, site navigation |
| Disaster Response | Hazard entry, toxic material handling, firefighting, nuclear facility operations, extreme condition tasks |
| Household Tasks | Cleaning, cooking, household maintenance, companionship, autonomous assistance |
| Manufacturing | Assembly, welding, packaging, repetitive tasks, quality control, precision manufacturing |
| Patient Care & Assistance | Daily task support, medication reminders, physical therapy, vital sign measurement, communication |
| Security & Surveillance | Home monitoring, personal protection, security patrols |
| Surgical & Medical Support | Surgery assistance, rehabilitation therapy, treatment adherence, vital sign monitoring |
| Military & Defense | Border surveillance, bomb disposal, urban warfare, equipment transport |
| Material Transport | Heavy load handling, delicate material handling, package delivery, collaborative transport |
| Warehouse Operations | Putaway, picking, packing, shipping, warehouse navigation, irregular item handling |

Humanoid robots can work around the clock without breaks or shift changes, pausing only to recharge or swap batteries, which maximizes operational uptime and overall productivity. Over the long term, this could translate into a significant reduction in labor-related expenditures.

“We estimate humanoids have the potential to bring about cost savings of roughly $500,000 to $1 million per human worker over 20 years.”

Humanoid Robot Market Outlook, Morgan Stanley
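Annualizing the Morgan Stanley estimate makes it easier to compare with the production-cost figures cited earlier. This is a deliberately simplified payback calculation: it treats the $30,000-$150,000 production-cost range as the purchase price and ignores maintenance, energy, integration, and financing costs.

```python
# Figures quoted in the text: $500k-$1M of savings per worker over 20 years,
# and an estimated production cost of $30k-$150k per robot.
# Treating production cost as purchase price is a simplifying assumption;
# maintenance, energy, integration, and financing costs are ignored.
YEARS = 20
SAVINGS_RANGE = (500_000, 1_000_000)
ROBOT_COST_RANGE = (30_000, 150_000)


def annual_savings(total: float, years: int = YEARS) -> float:
    """Spread total savings evenly across the period."""
    return total / years


def payback_years(robot_cost: float, savings_per_year: float) -> float:
    """Simple (undiscounted) payback period."""
    return robot_cost / savings_per_year


low_annual = annual_savings(SAVINGS_RANGE[0])    # conservative savings
high_annual = annual_savings(SAVINGS_RANGE[1])   # optimistic savings

best_case = payback_years(ROBOT_COST_RANGE[0], high_annual)   # cheap robot, big savings
worst_case = payback_years(ROBOT_COST_RANGE[1], low_annual)   # costly robot, small savings

print(f"Annual savings: ${low_annual:,.0f}-${high_annual:,.0f}")
print(f"Simple payback: {best_case:.1f}-{worst_case:.1f} years")
```

Under those assumptions, annual savings of $25,000-$50,000 would pay back the robot in well under a year in the best case and in about six years in the worst.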

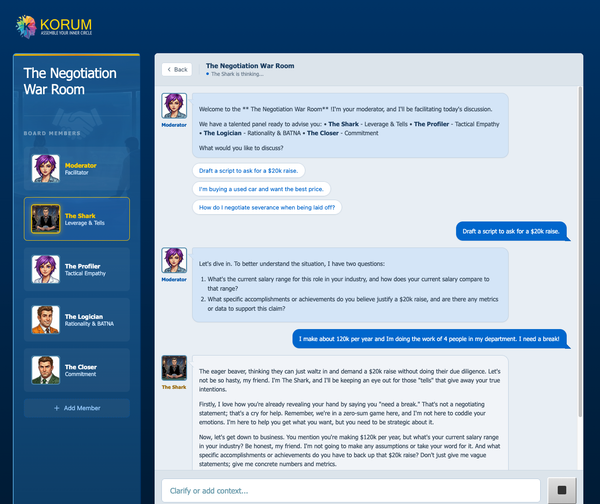

Artificial Intelligence

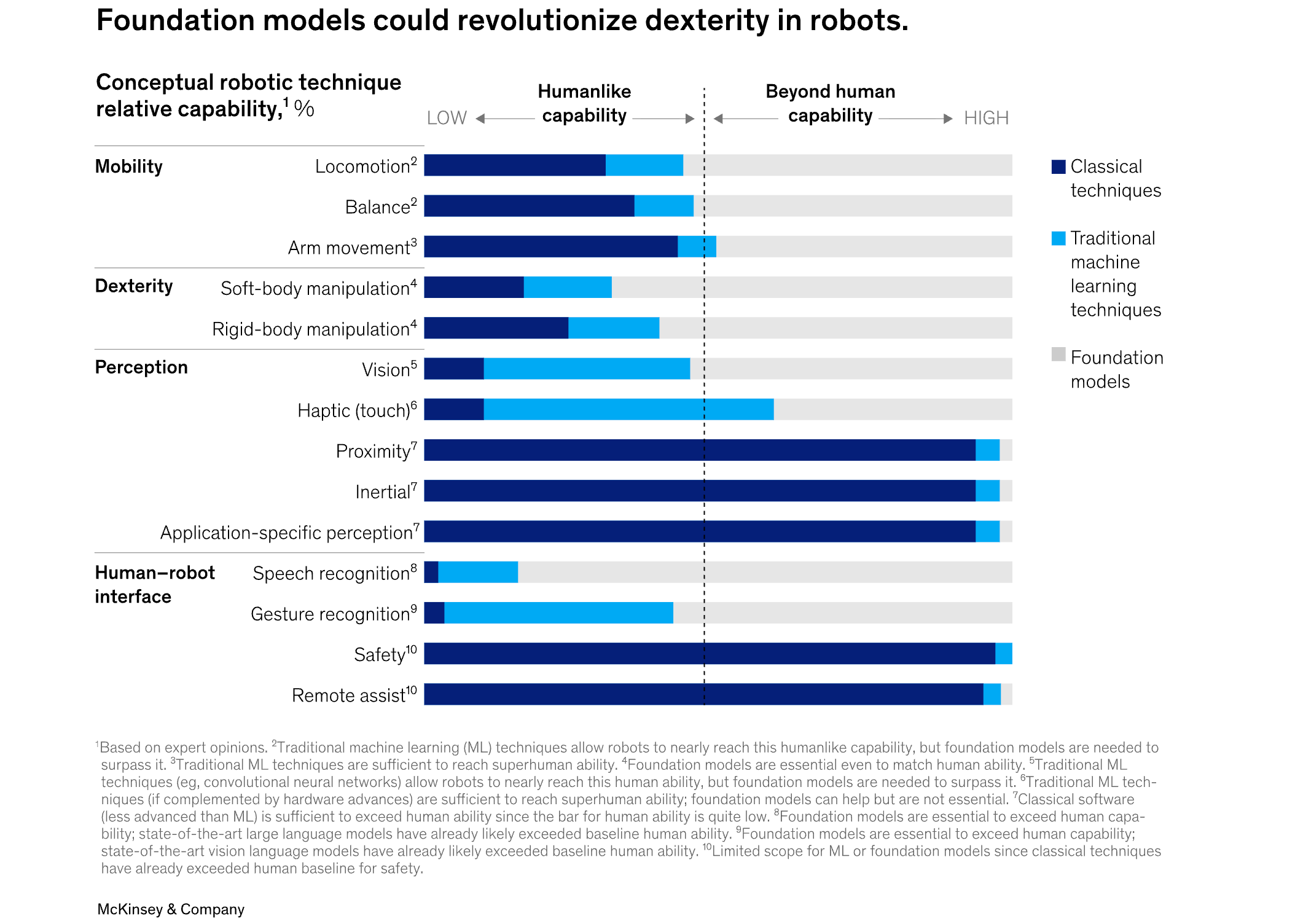

The central arena for competition in robotics is no longer about the hardware, but about the intelligence that animates it. The challenge is not which physical form will win, but which AI can most effectively bridge the gap between a machine's potential and the messy reality of our physical world.

The field of AI models for humanoid robots has undergone a revolutionary transformation in 2025, driven by the convergence of multi-modal large language models (LLMs) and vision-language models (VLMs). These advances are enabling humanoid robots to move beyond simple programmed behaviors toward general-purpose intelligence that can perceive, reason, and act in complex real-world environments.

The modern approach to robotic intelligence solves a critical bootstrap problem: a robot no longer needs to learn about the world from scratch. Instead, these new AI systems first inherit a broad semantic knowledge base from vision-language models pre-trained on the internet. This crucial first step gives them a human-like context for objects and concepts, which is then refined through fine-tuning on specialized robotic data.

This foundation enables a more sophisticated, modular design that is now becoming the industry standard. Inspired by Daniel Kahneman's "thinking fast and slow" framework, these systems combine multiple specialized models. A large-scale VLM serves as the deliberative "System 2," responsible for high-level reasoning and planning. This, in turn, commands a nimble "System 1" transformer that executes low-level, real-time motor control. Figure AI’s Helix system, with its distinct reasoning (S2) and action (S1) components, is a prime example of this bifurcated approach.
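The System 2 / System 1 split is essentially two control loops running at different rates. The toy sketch below illustrates that structure: a slow planner refreshes a goal every 25 ticks, while a fast controller acts on the most recent goal every tick. The rates, the `Goal` type, and the `system2_plan` and `system1_act` functions are hypothetical stand-ins (a keyword lookup and a proportional controller), not Figure's Helix implementation.

```python
from dataclasses import dataclass

# Illustrative rates (hypothetical): a slow deliberative planner and a fast
# reactive controller driven by a single shared clock.
CONTROL_HZ = 200                             # System 1: low-level control rate
PLANNER_HZ = 8                               # System 2: high-level reasoning rate
TICKS_PER_PLAN = CONTROL_HZ // PLANNER_HZ    # planner runs every 25 control ticks


@dataclass
class Goal:
    """Hypothetical latent goal passed from System 2 down to System 1."""
    target_x: float


def system2_plan(instruction: str, position: float) -> Goal:
    """Stand-in for a large VLM. This toy version ignores `position` and
    maps any instruction mentioning 'shelf' to x = 1.0, otherwise x = 0.0."""
    return Goal(target_x=1.0 if "shelf" in instruction else 0.0)


def system1_act(goal: Goal, position: float, gain: float = 0.05) -> float:
    """Stand-in for a small, fast policy: a proportional step toward the goal."""
    return gain * (goal.target_x - position)


def run(instruction: str, seconds: float = 2.0) -> tuple[float, int]:
    position = 0.0
    goal = Goal(target_x=position)
    plans = 0
    for tick in range(int(seconds * CONTROL_HZ)):
        if tick % TICKS_PER_PLAN == 0:           # slow loop: re-plan occasionally
            goal = system2_plan(instruction, position)
            plans += 1
        position += system1_act(goal, position)  # fast loop: act on every tick
    return position, plans


final_position, plan_count = run("move to the shelf")
print(f"final position {final_position:.3f} after {plan_count} planner calls")
```

The key design point is that the fast loop never waits on the slow one; it always acts on the latest available goal, so motor control stays responsive even though high-level reasoning is comparatively slow.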

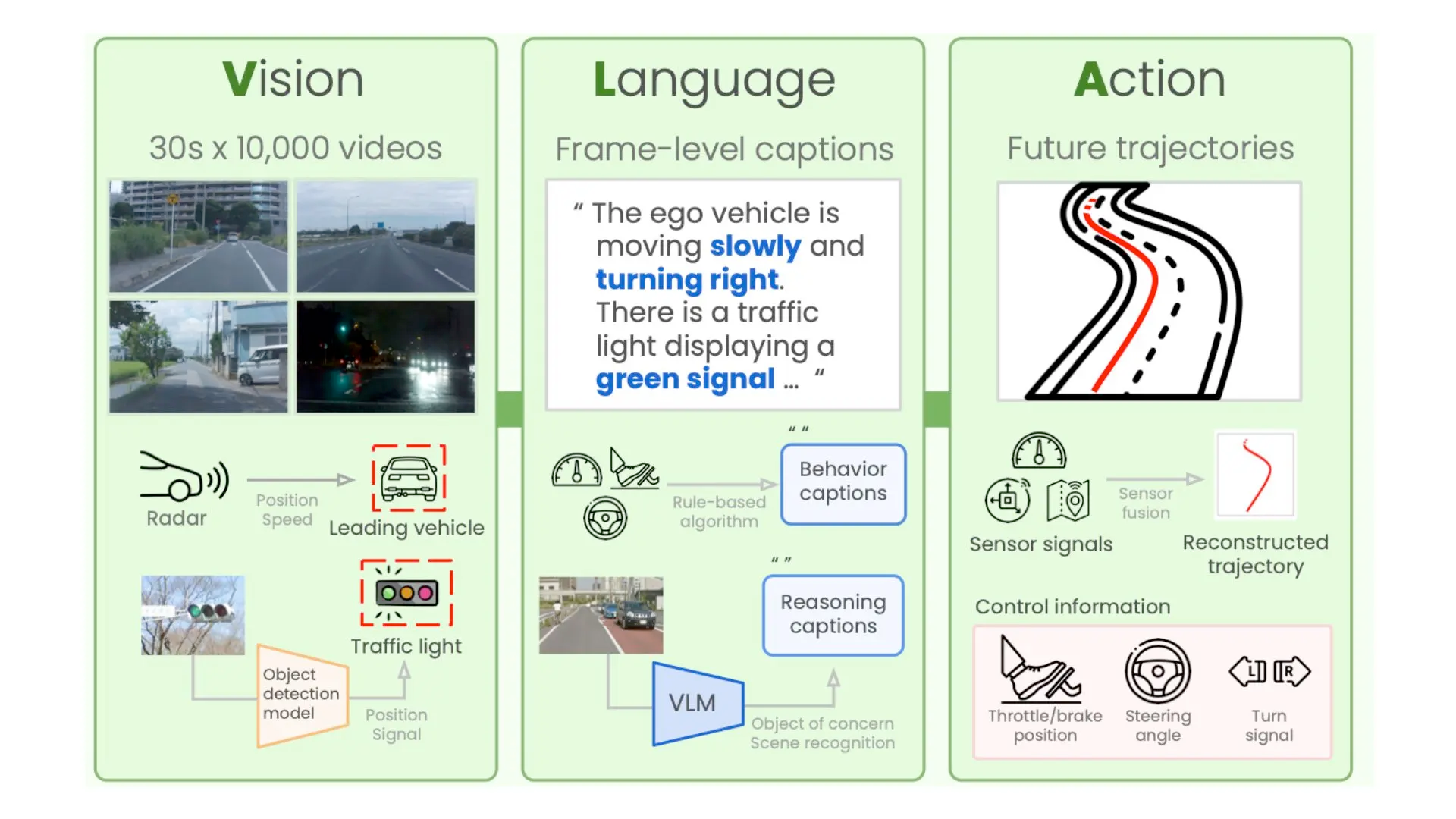

Vision-Language-Action (VLA) Models

Recent advancements in artificial intelligence are converging toward a new class of integrated systems known as Vision-Language-Action (VLA) models. These models represent a significant paradigm shift in robotics and autonomous systems, moving away from fragmented, task-specific modules toward unified, end-to-end architectures.

A VLA model is an all-in-one system designed to process and integrate three fundamental modalities:

- Vision: This modality involves the perception and interpretation of the environment through sensory data, typically from cameras or other sensors. VLA models can analyze complex visual scenes to identify objects, understand spatial relationships, and track dynamic changes in real time.

- Language: This refers to the model's capacity for natural language understanding (NLU). It enables the system to interpret high-level commands provided by a human operator via text or voice, allowing for more intuitive and flexible human-robot interaction.

- Action: This is the model's output, encompassing the generation and execution of physical movements or digital tasks. Actions are not pre-programmed but are dynamically determined based on the synthesis of visual perception and language-based instructions.

The key innovation of VLA models lies in their integrated nature. Traditional robotic systems often employ a pipeline architecture, where separate components handle perception, planning, and control. This modular approach can introduce latency and complexity, making it difficult to learn and execute complex behaviors.

In contrast, VLA models function more like a biological brain, processing sensory input, language, and motor control concurrently within a single neural network. This end-to-end approach allows the model to learn direct mappings from perception to action, conditioned on a given instruction.

To illustrate this concept, consider a VLA-powered autonomous vehicle tasked with the command: “Drive to the grocery store.”

A traditional system might parse the command, consult a mapping service to generate a static route, and then use separate perception and control modules to follow that route.

A VLA model approaches the task holistically:

- It continuously processes the vision data stream, identifying traffic lights, road markings, other vehicles, and pedestrians.

- It uses the language instruction ("Drive to the grocery store") as a high-level goal that contextualizes its decision-making process.

- It generates low-level actions—fine-tuned adjustments to steering, acceleration, and braking—as a direct response to the interplay between its goal and its real-time perception of the environment.
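The end-to-end idea can be made concrete with a deliberately tiny, untrained model. In this sketch, one network takes camera pixels and an encoded instruction together and emits an action vector directly, with no separate perception, planning, or control modules. Everything here (`ToyVLA`, the bag-of-words encoder, the sizes) is hypothetical, and the weights are random, so the actions are meaningless; only the data flow mirrors a VLA model.

```python
import math
import random

# Toy dimensions (all hypothetical): a 4x4 grayscale "camera frame",
# a six-word instruction vocabulary, and a 3-dimensional action vector.
IMG_PIXELS = 16
VOCAB = ["pick", "place", "cup", "box", "left", "right"]
HIDDEN = 8
ACTION_DIM = 3


def encode_instruction(text: str) -> list[float]:
    """Bag-of-words encoding of the instruction over the toy vocabulary."""
    words = text.lower().split()
    return [float(words.count(w)) for w in VOCAB]


class ToyVLA:
    """One network maps [pixels ; instruction] -> hidden layer -> action.
    Weights are random; a real VLA would be a large pretrained
    vision-language transformer fine-tuned on robot data."""

    def __init__(self, seed: int = 0) -> None:
        rng = random.Random(seed)
        in_dim = IMG_PIXELS + len(VOCAB)
        self.w1 = [[rng.gauss(0.0, 0.3) for _ in range(in_dim)] for _ in range(HIDDEN)]
        self.w2 = [[rng.gauss(0.0, 0.3) for _ in range(HIDDEN)] for _ in range(ACTION_DIM)]

    def act(self, pixels: list[float], instruction: str) -> list[float]:
        x = pixels + encode_instruction(instruction)  # fuse vision and language
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in self.w1]
        # tanh keeps every action component in [-1, 1], like normalized commands
        return [math.tanh(sum(w * hi for w, hi in zip(row, h))) for row in self.w2]


policy = ToyVLA(seed=42)
frame = [0.5] * IMG_PIXELS                      # a flat gray test image
pick_action = policy.act(frame, "pick the cup")
place_action = policy.act(frame, "place the box left")
print(pick_action)
print(place_action)
```

Because the instruction is part of the network's input, the same camera frame yields a different action when the command changes, which is the sense in which actions are conditioned on language.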

Shortage of Training Data

While large language models have been feasting on the vast banquet of the internet, a far hungrier class of AI is facing a famine. The development of capable humanoid robots, what we call embodied intelligence, is constrained less by processing power or algorithms than by a profound scarcity of data.

The challenge is that the data for physical intelligence is fundamentally different from the sprawling text and image corpora that fuel today's generative models. Robotic motion data is a high-dimensional stream of movement, force, and sensory feedback, all intricately dependent on context and time. Think less of a digital library, and more of an infinitely complex ballet that must be recorded and understood. This disparity creates the central bottleneck for progress in the world of atoms.

To grasp the sheer scale of this problem, consider the framework coined by UC Berkeley professor Ken Goldberg: the “100,000-year data gap.”

The upshot is sobering. Goldberg’s analysis suggests that, at the current rate of data collection for robotic systems, building a dataset comparable in volume to the one used to train ChatGPT would take a hundred millennia. This data gap isn't just a technical hurdle; it is the core strategic challenge that will define the next decade of robotics, and it is what separates today's digital AI from the truly autonomous, general-purpose machines of the near future.
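The scale of the gap is easier to grasp as fleet arithmetic. This sketch assumes, as a simplification, that one "year" of data means a full year of continuous recording, and asks how long a fleet would take to accumulate 100,000 such years. The fleet size and daily hours are hypothetical, and the calculation ignores the quality and diversity of the data, which matter as much as volume.

```python
HOURS_PER_YEAR = 24 * 365          # one "year" of continuous data, in hours
GAP_YEARS = 100_000                # Goldberg's 100,000-year framing
TARGET_HOURS = GAP_YEARS * HOURS_PER_YEAR


def years_to_close(fleet_size: int, hours_per_robot_per_day: float) -> float:
    """Calendar years for a fleet to log TARGET_HOURS of interaction data."""
    hours_per_year = fleet_size * hours_per_robot_per_day * 365
    return TARGET_HOURS / hours_per_year


# Sanity check: one robot recording around the clock needs the full 100,000 years.
print(years_to_close(1, 24))
# Hypothetical fleet: 10,000 teleoperated robots, 8 hours a day each.
print(years_to_close(10_000, 8))
```

Even a hypothetical fleet of 10,000 robots recording eight hours a day would need about 30 years to close the gap, which is why researchers are looking beyond teleoperation alone.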

Several methods are being explored to generate the necessary data, each with distinct advantages and limitations:

| Approach | Description | Challenges / Limitations |
|---|---|---|
| Teleoperation | Human operators remotely control robots to generate high-fidelity motion data. Captures nuanced behaviors. Largest reported dataset is ~1 year of cumulative data. | Resource-intensive, difficult to scale. Extrapolation suggests ~100,000 years needed to match ChatGPT-scale data. |
| Simulation | Data generated within digital environments. Effective for training locomotion and flight control systems. | Notoriously unreliable for complex manipulation tasks due to the sim-to-real gap (behaviors learned in simulation often fail in real-world transfer). |
| Internet Video Analysis | Leverages vast video content (e.g., YouTube), estimated at ~35,000 years of footage. Potentially enormous data source. | Major obstacle: the “lifting” problem, i.e. reconstructing precise 3D kinematics (hand, body, object) from 2D video. Expected to remain unsolved in the near term. |
| Real-World Commercial Robot Data | Uses data from robots already deployed in commercial settings (e.g., logistics, manufacturing, autonomous driving). Provides physically grounded, large-scale interaction data. | Limited to specific tasks and applications, but offers a pragmatic pathway to close the data gap for critical robotic use cases. |

Meet Your Future Coworkers

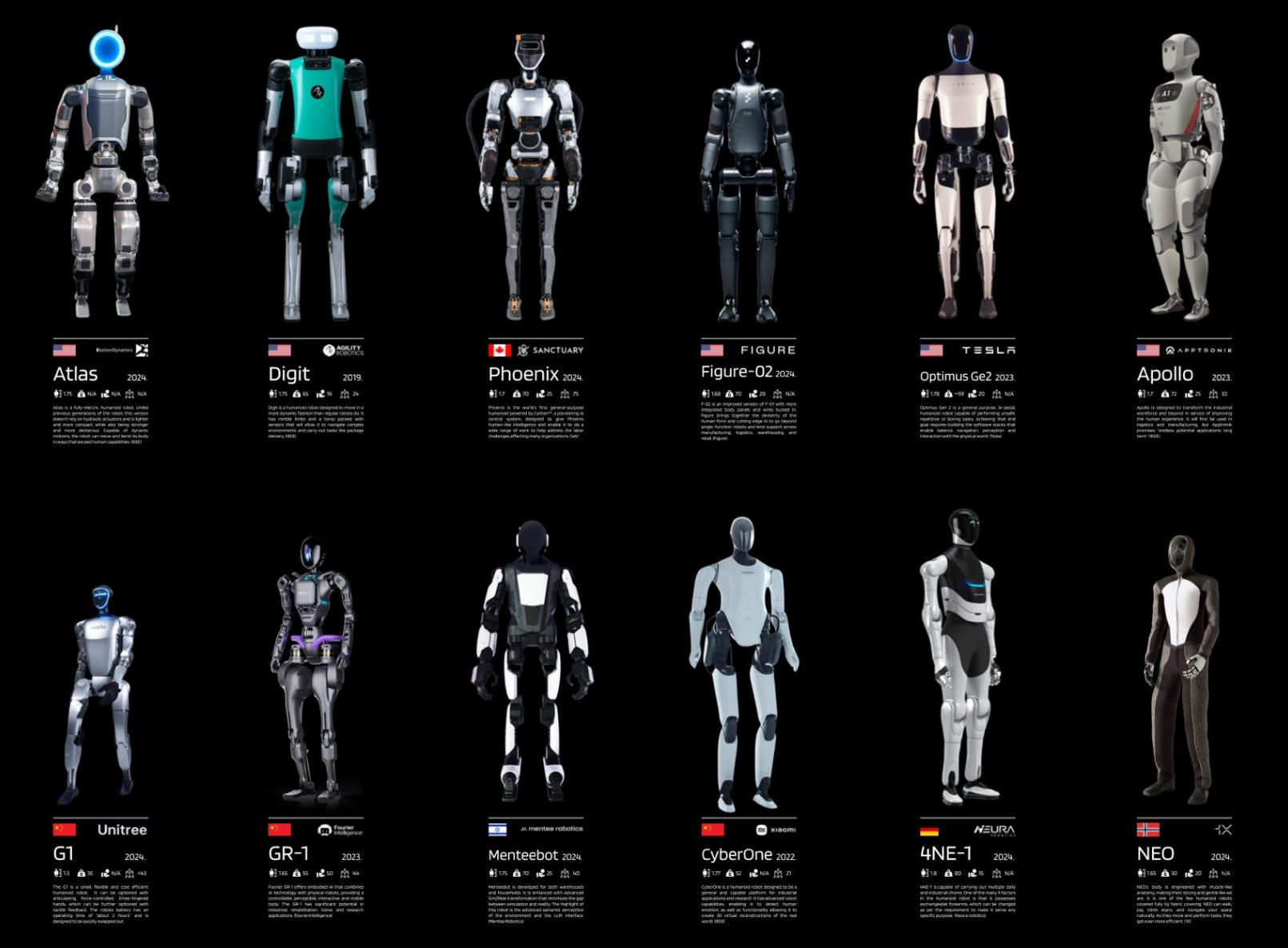

Below, we take a closer look at four of the leading efforts to build a general-purpose humanoid robot.

| Category | Key examples (2025) | Notable strengths |
|---|---|---|
| Humanoid robots | 1X NEO, Figure 02, Tesla Optimus, Boston Dynamics Atlas, Agility Robotics Digit | Lifelike motion, AI planning, general-purpose tasks, improved batteries and compute |
| Industrial cobots and arms | Standard Bots RO1, ABB GoFa, FANUC CRX, KUKA LBR iisy, Yaskawa AR series | Sub-mm precision, safe collaboration, long reach, easy to program |
| Home or service robots | Amazon Astro, Relay Robotics, SoftBank Pepper | Voice control, autonomous navigation, real-world delivery, and interaction use |

1X NEO

1X Technologies is challenging the conventions of humanoid robotics with NEO, a bipedal android engineered from the ground up for safety, learning, and scalability. Moving beyond the heavy, industrial designs of its competitors, NEO’s lightweight 30 kg frame is built to integrate seamlessly and safely into human-centric spaces like retail stores and logistics centers.

Key Features and Innovations:

- Inherently Safe by Design: At just 30 kg (66 lbs) and 165 cm (5’ 5”), NEO is one of the lightest humanoid robots in development. Its design prioritizes safe co-working with people without sacrificing capability, including the ability to lift up to 20 kg.

- Embodied AI Learning: NEO’s intelligence is not hard-coded; it is learned. It operates on a single neural network that controls its movements from end to end. This AI is trained on vast datasets collected by 1X's wheeled EVE androids, allowing NEO to acquire skills dynamically.

- Natural Language Interaction: Equipped with a sophisticated vision-language model (VLM), NEO can comprehend and act on high-level voice commands, making it accessible to non-technical users and adaptable to changing operational needs.

- Dynamic Mobility: The NEO platform is capable of both stable walking (4 km/h) and running (12 km/h), giving it the speed and agility to navigate complex commercial environments efficiently.

With strong backing from investors including OpenAI, 1X is deploying NEO to offer a versatile robotic workforce that learns from and adapts to the real world.

Figure 02 by Figure AI

Figure 02 is a full-sized, general-purpose humanoid robot developed by Figure AI to operate alongside human workers in industrial environments.

Key Features and Capabilities:

- Human-like Dexterity: With a height of 1.7 meters and a weight of 70 kilograms, Figure 02 is built on a human scale. Each hand features 16 degrees of freedom, allowing it to handle tools and components with the precision required for complex manufacturing and assembly.

- Advanced AI Cognition: Figure 02 launched with a vision-language model developed with OpenAI, which Figure has since replaced with its in-house Helix system. This AI enables the robot to perceive its environment, understand complex instructions from voice or visual prompts, and independently plan and execute tasks.

- Industrial-Grade Performance: Engineered for endurance, the robot carries a 2.25 kWh battery pack, and Figure has said it is targeting more than 20 hours of useful work per day. Its onboard compute delivers triple the processing power of the previous version, ensuring rapid and reliable performance.

In the Field: Figure 02 is currently operational at BMW’s U.S. manufacturing facility, where it is tasked with assembly support and material transport. This partnership demonstrates the robot's ability to handle repetitive and physically demanding jobs, aiming to enhance productivity and improve worker safety.

“This week, Figure has passed 5 months running on the BMW X3 body shop production line. We have been running 10 hours per day, every single day of production! It is believed that Figure and BMW are the first in the world to do this with humanoid robots.”

Brett Adcock (@adcock_brett), October 6, 2025

Figure AI has raised over $675 million from backers including Microsoft and Nvidia and was most recently valued at $39 billion, positioning it to scale production and deploy its humanoid robots across various sectors.

Figure recently announced BotQ, a new high-volume manufacturing facility for humanoid robots capable of producing up to 12,000 humanoids per year.

Tesla Optimus

Tesla is actively developing Optimus, a humanoid robot designed for a wide range of applications, from manufacturing and logistics to personal assistance. Designed for efficiency and agility, Optimus stands 173 cm tall with a lightweight 57 kg frame and is equipped with advanced manipulators offering 11 degrees of freedom per hand.

Key Innovations and Features:

- Unified AI Architecture: According to Tesla, Optimus is powered by the same Full Self-Driving (FSD) AI stack used in its vehicles. This integrated approach enables robust, vision-based navigation and task processing without the need for pre-programmed paths or external guidance.

- Engineered for Endurance: The robot incorporates a 2.3 kWh battery system, which Tesla says provides enough power for a full workday, making it a viable candidate for single-shift industrial applications.

- Designed for Scalability: With a projected price point under $20,000, Tesla aims to overcome the cost barriers that have historically limited the adoption of humanoid robots, paving the way for mass production.

In the Field: Having progressed from conceptual demonstrations—including walking and delicate object handling at recent AI Day events—Optimus is now being tested in live production environments. Internal trials at Tesla factories see the robot performing material handling tasks, autonomously navigating factory floors and executing pick-and-place jobs, showing its potential to augment human labor in repetitive workflows.

The company is grappling with another leadership departure in its Optimus division, raising concerns among investors about its future trajectory in humanoid robotics.

Boston Dynamics Atlas

Boston Dynamics has reimagined its iconic Atlas robot with the April 2024 unveiling of an all-electric model, purpose-built for industrial work. Replacing its legacy hydraulic systems, the new Atlas features a state-of-the-art electric actuation system that delivers unprecedented strength and a range of motion beyond human limits.

Key Features and Capabilities:

- Superhuman Performance: Engineered with stronger joints and superior flexibility, the electric Atlas is designed to handle tasks that are difficult or dangerous for human workers.

- Intelligent and Agile Motion: With embedded machine-learning controls, Atlas can react and adapt to its environment in real-time. It maintains its signature agility for dynamic, bipedal motion even in complex, confined spaces.

- Industrial-Grade Efficiency: The fully electric platform not only enhances performance but also offers greater energy efficiency, making it a viable solution for demanding manufacturing environments.

In the Field: The first real-world application for the new Atlas will be at Hyundai’s automotive factories. It will be integrated into the production line to support heavy lifting and assembly operations. This strategic partnership aims to leverage the robot's dynamic capabilities to increase efficiency and significantly reduce the risk of physical injury to the human workforce.

Conclusion

We are at a rare historical inflection point, where multiple monumental trends are converging to define a new economic paradigm. On one side are the powerful demand pressures: the move away from hyper-efficient global supply chains toward regional resilience is colliding with a demographic cliff—the "Silver Tsunami"—creating a structural vacuum in the labor market. On the other side is the technological response: the commoditization of robotic hardware and, most importantly, the exponential acceleration in artificial intelligence.

This is not a story of human replacement, but one of labor transformation. To understand the likely impact, we can look to the last wave of automation. Industrial robots did not end factory work; they redefined it, creating new, higher-value roles in programming, systems integration, and maintenance.

The coming wave of embodied AI will follow a similar path, but at a much larger scale. The upshot is an elevation of human work, shifting it away from repetitive physical tasks and toward roles centered on orchestration, oversight, and exception handling. The future of labor will be a partnership, with humans managing fleets of intelligent machines, defining their goals, and intervening when creativity and complex problem-solving are required.