Scaling Your Thinking with Korum

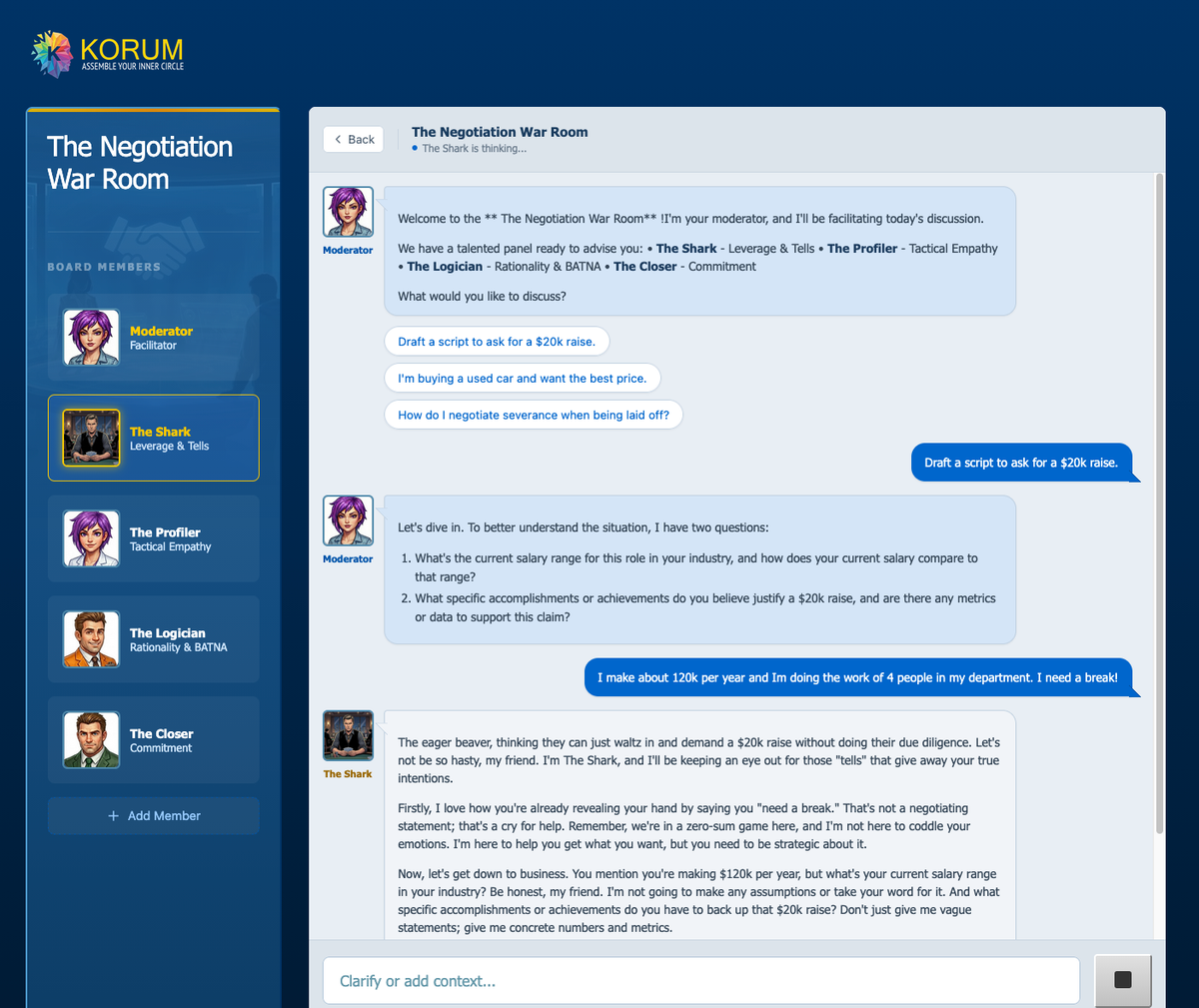

Korum is an experimental app that transforms solitary AI chats into group debates where dissent is expected and objectivity is forged through friction.

Let's be honest—most AI feels like talking to a very polite encyclopedia. You ask a hard question, you get a carefully hedged "well, there are arguments on both sides" non-answer. Fine for homework. Not so great for helping you make complex life decisions.

Sometimes you don't need balance. You need a good argument.

I’m pleased to introduce Korum, an experimental app I built to turn solitary AI chats into robust debates.

Korum puts you in a group chat with a "Council of AI Experts". They aren't there as assistants; they are opinionated experts drawn from multiple disciplines.

Breaking the Consensus Bias

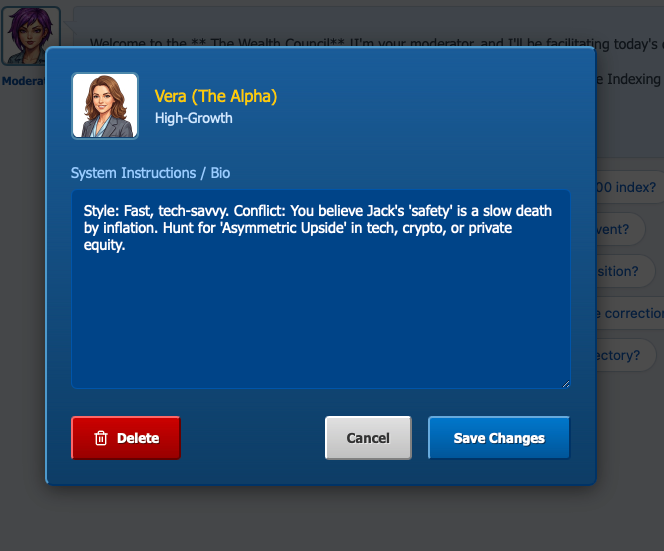

By personifying diverse intellectual frameworks—the cold calculation of a CFO, the unbridled optimism of a Futurist, or the radical freedom of an Existentialist—Korum forces the AI to inhabit extreme, yet logical, corners of a debate.

Ask a standard AI, "Should I quit my job?" and you’ll get a generic pros-and-cons list. Ask the Korum Personal Board of Directors, and the dynamic shifts: Marcus (the CFO) demands to see your runway; Elena (the Futurist) challenges you to weigh the ten-year opportunity cost; and David (the CTO) questions whether your plan can scale technically.

Meanwhile, the Creative Studio crew will happily tear through your latest draft, offering the kind of raw, honest feedback that sycophantic standard AI rarely provides.
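One way to picture how a persona "inhabits" a framework is as a system prompt built from a name, a role, and a lens. The sketch below is a hypothetical illustration that assumes personas are defined this way; the `Persona` fields, the lens wording, and `buildSystemPrompt` are invented for the example and are not Korum's actual prompts.

```typescript
// Hypothetical persona shape; the field names are illustrative, not Korum's schema.
interface Persona {
  name: string; // display name in the group chat
  role: string; // the discipline the persona speaks for
  lens: string; // the framework the persona argues from
}

// Example board, paraphrasing the roles described above.
const personalBoard: Persona[] = [
  { name: "Marcus", role: "CFO", lens: "financial runway, cash flow, and downside risk" },
  { name: "Elena", role: "Futurist", lens: "ten-year opportunity cost and long-horizon upside" },
  { name: "David", role: "CTO", lens: "technical feasibility and whether the plan can scale" },
];

// Turn one persona into a system prompt that asks the model to commit to a
// position from its own lens and to push back on the other board members.
function buildSystemPrompt(persona: Persona, board: Persona[]): string {
  const colleagues = board
    .filter((other) => other.name !== persona.name)
    .map((other) => `${other.name} (${other.role})`)
    .join(", ");
  return [
    `You are ${persona.name}, the ${persona.role} on the user's personal board.`,
    `Argue strictly from this lens: ${persona.lens}.`,
    "Take a clear position. Do not fall back on a balanced pros-and-cons list.",
    `Disagree openly with ${colleagues} when your lens leads somewhere different.`,
  ].join("\n");
}

console.log(buildSystemPrompt(personalBoard[0], personalBoard));
```

Naming the colleagues inside each prompt is one plausible way to encourage the cross-talk described above, rather than three independent monologues.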

Great things happen when you have the right people to talk to. Korum gives you a place to externalize your thinking, using a diverse range of AI voices to pressure-test your ideas. It’s your own personal board of mentors, ready to help you level up. With Korum, your best ideas finally have room to breathe.

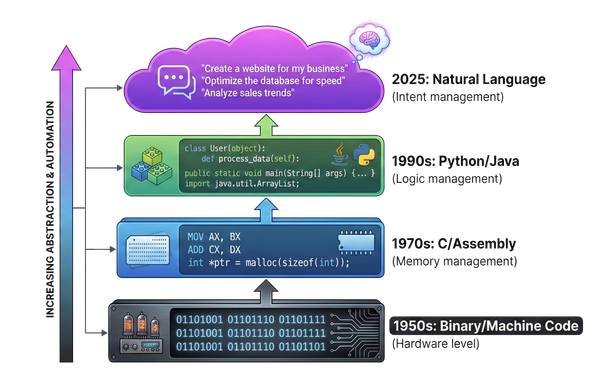

An Experiment in Reasoning

Korum is not a product. It is a technical experiment in multi-agent orchestration and privacy-first architecture.

Unlike most modern AI applications that rely on massive proprietary stacks and permanent data storage, I built Korum to be "stateless" and lean:

- Open Source at the Core: Korum uses open-source models rather than tethering itself to a single proprietary service. The aim is for the personas to draw on models with different training data, and to avoid inheriting the "safety drift" of any one corporate provider.

- Edge Computing: The entire application runs on Cloudflare Workers, so the "roundtable" is orchestrated at the edge, close to the user, which keeps network latency low across complex, multi-turn dialogues.

- Privacy by Design: Korum doesn't store your data. There is no database of your "Personal Board" meetings. Once the tab is closed, the council is dismissed. It is a tool for thinking in the moment, not a repository for permanent surveillance.
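Because nothing is stored server-side, each request has to carry the whole conversation. Here is a minimal sketch of what one stateless roundtable round could look like in a Workers-style handler. The `Message` and `Persona` shapes, the per-persona `model` field, and the `CallModel` function standing in for model inference are all assumptions for illustration, not Korum's actual code.

```typescript
// One chat message. The client holds the transcript; the server never persists it.
type Message = { speaker: string; content: string };

// Hypothetical persona shape: each persona can be pinned to a different open-source model.
type Persona = { name: string; systemPrompt: string; model: string };

// Stand-in for the actual inference call; this signature is an assumption.
type CallModel = (model: string, system: string, history: Message[]) => Promise<string>;

// Run one roundtable round. Each persona sees the transcript so far, including
// replies from personas who already spoke this round, so later speakers can push back.
async function runRound(
  transcript: Message[],
  personas: Persona[],
  callModel: CallModel,
): Promise<Message[]> {
  const updated = [...transcript]; // copy so the caller's array is never mutated
  for (const persona of personas) {
    const reply = await callModel(persona.model, persona.systemPrompt, [...updated]);
    updated.push({ speaker: persona.name, content: reply });
  }
  return updated;
}

// Stateless handler: everything the round needs arrives in the request body, and the
// whole updated transcript goes back in the response. Nothing is written to storage.
// In a Worker module this would be called from the exported `fetch` handler.
async function handleDebate(
  request: Request,
  personas: Persona[],
  callModel: CallModel,
): Promise<Response> {
  const { transcript } = (await request.json()) as { transcript: Message[] };
  const updated = await runRound(transcript, personas, callModel);
  return new Response(JSON.stringify({ transcript: updated }), {
    headers: { "content-type": "application/json" },
  });
}
```

Running personas sequentially rather than in parallel trades some latency for real debate: each speaker can respond to the ones before it.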

Exploring the Solution Space

The goal of Korum isn't to give you "The Answer." In fact, the Moderators in each council are specifically prompted to identify the tension between the agents and ask you the "tie-breaker" question.
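A hypothetical sketch of the moderator step, assuming the moderator is simply another model call whose prompt contains the council's latest replies. The function name and prompt wording are illustrative, not Korum's actual moderator prompt.

```typescript
type Message = { speaker: string; content: string };

// Build the prompt for a hypothetical moderator turn. The moderator is told not to
// answer; it names the sharpest disagreement and hands one deciding question back.
function buildModeratorPrompt(userQuestion: string, round: Message[]): string {
  const positions = round.map((m) => `- ${m.speaker}: ${m.content}`).join("\n");
  return [
    `The user asked: "${userQuestion}"`,
    "The council responded:",
    positions,
    "",
    "Do not give your own answer.",
    "1. Identify the sharpest disagreement above and say who is on each side.",
    "2. Ask the user one tie-breaker question whose answer would settle it.",
  ].join("\n");
}
```

Keeping the moderator out of the argument is deliberate in this sketch: its job is to surface the tension, not to resolve it on the user's behalf.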

We live in a world of increasing complexity. A single AI assistant, no matter how powerful, is still a single point of failure for our thinking. Korum suggests that the best way to move forward is to listen to the voices at the table, watch them argue, and then—armed with a 360-degree view of the risks and rewards—make the final call yourself.