Reasoning Without Words

The Chain of Continuous Thought approach can solve complex problems faster and more efficiently than traditional, language-based AI.

Thinking without language enables AI to navigate complex problem spaces.

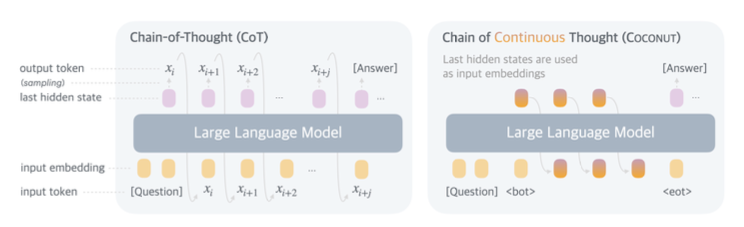

Current large language models rely on Chain of Thought reasoning for problem solving. This approach mimics human problem-solving by generating sequences of words, as though the AI were narrating its thought process to itself. However, this reliance on language has limitations. When humans solve complex puzzles, we don’t typically verbalize every step; instead, our reasoning often unfolds in non-verbal, intuitive ways. Neuroscience research supports this, showing that the language-processing parts of our brain are often inactive during complex reasoning tasks. This suggests that language may serve more as an explanatory tool, used after solutions are reached, rather than as a fundamental mechanism of thought.

A recent research paper titled Training Large Language Models to Reason in a Continuous Latent Space introduces a novel approach to AI reasoning called Chain of Continuous Thought (COCONUT). Unlike traditional models that rely on generating words, COCONUT represents thoughts as complex patterns of activation within a neural network. Instead of decoding each reasoning step into a word, the model feeds its last hidden state straight back in as the next input. These patterns flow continuously, unbroken by the constraints of language. In this latent space, AI operates more abstractly, representing concepts without reducing them to discrete words or sentences.
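To make the difference concrete, here is a minimal sketch in plain Python. It is a toy recurrence, not the paper's transformer: the weights `W`, the embedding table `EMBED`, the sizes, and every function name are assumptions invented for illustration. It only contrasts the two loops: one that collapses each step to a single token, and one that passes the full hidden state forward.

```python
import math
import random

random.seed(0)
DIM, VOCAB = 4, 6  # toy sizes, chosen arbitrarily for illustration

# Hypothetical stand-ins for a real model's weights and embedding table.
W = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]
EMBED = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(VOCAB)]

def step(x):
    """One toy 'forward pass': maps an input vector to a hidden state."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W]

def nearest_token(h):
    """Decode a hidden state to the best-matching vocabulary entry (dot product)."""
    scores = [sum(e * hi for e, hi in zip(emb, h)) for emb in EMBED]
    return scores.index(max(scores))

def reason_with_tokens(x, n_steps):
    """Chain of Thought style: every step is collapsed to one discrete token."""
    tokens = []
    for _ in range(n_steps):
        h = step(x)
        tok = nearest_token(h)
        tokens.append(tok)
        x = EMBED[tok]  # only the chosen token's embedding is carried forward
    return tokens, x

def reason_in_latent_space(x, n_steps):
    """Continuous-thought style: the hidden state itself is the next input."""
    for _ in range(n_steps):
        x = step(x)  # no decoding, so nothing is rounded off to a word
    return x

tokens, _ = reason_with_tokens(EMBED[0], 3)
latent = reason_in_latent_space(EMBED[0], 3)
print("token chain:", tokens)
print("latent state:", [round(v, 3) for v in latent])
```

In the token loop, whatever the hidden state encoded beyond the winning token is thrown away at every step; in the latent loop it is carried forward intact.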

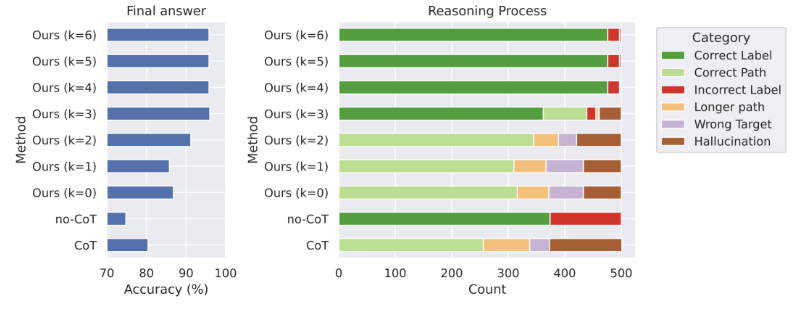

COCONUT was trained using examples of human problem-solving articulated through language. Gradually, the researchers removed this linguistic "scaffolding," encouraging the AI to rely more on its internal, continuous representations. This process allowed COCONUT to function independently of language, developing a reasoning style akin to a tree search—exploring multiple possibilities in parallel rather than following a linear chain of thoughts.
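The gradual removal of scaffolding can be pictured as a staged curriculum. The sketch below is an assumption-laden illustration, not the authors' code: the function name, the `<bot>`, `<eot>`, and `<latent>` placeholder strings, and the `thoughts_per_step` parameter are all hypothetical. It shows how, at each stage, one more written reasoning step could be swapped for latent slots while the remaining steps stay in language.

```python
def build_stage_example(question, steps, answer, stage, thoughts_per_step=1):
    """Build one training sequence for a given curriculum stage.

    The first `stage` written reasoning steps are replaced by latent
    placeholders; later steps and the answer stay as ordinary text.
    """
    n_hidden = min(stage, len(steps))  # cannot hide more steps than exist
    latent = ["<latent>"] * (n_hidden * thoughts_per_step)
    return [question, "<bot>", *latent, "<eot>", *steps[n_hidden:], answer]

# Hypothetical worked example with three written reasoning steps.
steps = ["step 1", "step 2", "step 3"]
for stage in range(4):
    print(stage, build_stage_example("question", steps, "answer", stage))
```

At stage 0 the model sees every step in words; by the last stage it sees only the question, the latent slots, and the answer.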

This latent space reasoning offers significant advantages. Linear thought through language forces the model to make a concrete decision at each step; if it chooses the wrong word, it can go down a rabbit hole that misses the correct solution. In contrast, COCONUT can maintain a broader range of possibilities until it identifies the most promising solutions. This approach reduces errors caused by premature decisions and allows the model to navigate complex problem spaces more fully.
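The cost of committing too early can be shown with an explicit search over a tiny made-up graph. This is only an analogy: a latent-reasoning model does not literally run a queue, and the graph, the `SCORE` values, and the function names below are hypothetical. The greedy chain always takes the locally best-looking option and cannot back out, while the frontier search keeps every candidate path open until one reaches the goal.

```python
from collections import deque

# Hypothetical problem space: each node lists its possible next steps.
GRAPH = {
    "start": ["a", "b"],
    "a": ["a1"],
    "a1": [],          # dead end
    "b": ["b1"],
    "b1": ["goal"],
    "goal": [],
}
# Hypothetical local preferences: "a" looks better than "b" at first glance.
SCORE = {"a": 0.6, "b": 0.4, "a1": 0.9, "b1": 0.5, "goal": 1.0}

def greedy_chain(start, goal):
    """Commit to the best-looking child at every step, with no backtracking."""
    path = [start]
    while path[-1] != goal and GRAPH[path[-1]]:
        path.append(max(GRAPH[path[-1]], key=SCORE.get))
    return path

def frontier_search(start, goal):
    """Keep all open candidate paths alive (breadth-first) until one hits the goal."""
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        queue.extend(path + [nxt] for nxt in GRAPH[path[-1]])
    return None

print(greedy_chain("start", "goal"))     # ['start', 'a', 'a1']
print(frontier_search("start", "goal"))  # ['start', 'b', 'b1', 'goal']
```

The greedy chain ends in the dead end `a1` because its first choice looked best locally; the frontier search reaches `goal` because the less attractive branch was never discarded.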

The key innovation is the model's ability to preserve computational flexibility: a single continuous thought can encode several candidate next steps at once, so different routes can be explored, compared, and weighted without the overhead of fully writing out each potential path.

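The paper evaluates COCONUT on GSM8K for math reasoning and on ProntoQA and ProsQA for logical reasoning. On the logical reasoning tasks, which reward planning and backtracking, COCONUT outperformed the traditional Chain of Thought approach while generating far fewer tokens. On GSM8K it was more token-efficient but did not match Chain of Thought accuracy.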

The implication of this research is that latent space reasoning could be groundbreaking for science and engineering. Consider geometry, where finding solutions often relies on abstract, non-linguistic thought: visualizing shapes, patterns, and relationships rather than verbalizing them. Or consider agentic systems, where multiple AI agents collaborate to perform tasks. Currently, these systems rely on language as their primary medium for interim messaging, significantly limiting the bandwidth at which information can be exchanged. By moving past the bottleneck of linguistic constraints, AI systems could more readily reason and communicate about complex concepts.
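A back-of-the-envelope calculation gives a feel for the bandwidth gap. The numbers below are assumptions chosen for illustration (a vocabulary and hidden width roughly the size of GPT-2 small, stored as 16-bit floats), and both figures are upper bounds: the raw size of a vector says how much it could carry, not how much useful information a model actually packs into it.

```python
import math

def bits_per_token(vocab_size):
    """Upper bound on information in one discrete token: log2 of the vocabulary size."""
    return math.log2(vocab_size)

def raw_bits_per_vector(hidden_size, bits_per_value):
    """Raw storage size of one hidden-state vector (an upper bound, not true content)."""
    return hidden_size * bits_per_value

VOCAB_SIZE = 50257   # assumption: GPT-2 vocabulary size
HIDDEN_SIZE = 768    # assumption: GPT-2 small hidden width
BITS_PER_VALUE = 16  # assumption: half-precision floats

token_bits = bits_per_token(VOCAB_SIZE)
vector_bits = raw_bits_per_vector(HIDDEN_SIZE, BITS_PER_VALUE)
print(f"one token:  at most {token_bits:.1f} bits")
print(f"one vector: at most {vector_bits} raw bits ({vector_bits / token_bits:.0f}x)")
```

Under these assumptions a single token carries fewer than 16 bits, while a single hidden vector has room for thousands, which is the sense in which language acts as a narrow channel between agents.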

However, this new approach also raises challenges, particularly in making AI’s reasoning processes transparent. If AI operates in a hidden, continuous space, how can we trust its decisions? Researchers are actively working on visualization techniques to interpret these latent thoughts, offering insights into how the AI navigates problem spaces. Such transparency could foster not only trust but also a deeper understanding of problem-solving itself.

This research also challenges us to reconsider the role of language in intelligence. For centuries, language has been seen as the cornerstone of human cognition, the tool that distinguishes us from other species. But if AI can reason effectively without language, it suggests that intelligence may be a more fundamental capability, existing independently of words and grammar.

The advent of latent space reasoning marks a paradigm shift in our understanding of intelligence—both artificial and human. It challenges us to rethink the very foundations of thought and communication, opening the door to a future where the boundaries between human intuition and machine reasoning blur, and where the connections we make matter more than the words we use to express them.