This Week in AI Research: Object Detection and Cutting-Edge Audio Analysis

This post looks at state-of-the-art AI models for real-time object detection and advanced audio processing.

2/10/2024

Every day seems to bring a new wave of AI research. To cut through the noise, I curate a selection of works that I find interesting each week. My aim is to cover promising research before it's commercialized, giving my readers a sneak preview of the future.

This week's topics are:

- 🔎 Real-Time Object Detection: YOLO-World

- 🎧 Beyond Words: Audio Flamingo

🔎 Real-Time Object Detection: YOLO-World

- Significantly faster than prior open-vocabulary approaches

- Detects new object categories without additional training

- Robotics applications

YOLO-World is a remarkable leap forward in high-efficiency object detection. This approach excels at zero-shot classification of a wide range of objects while maintaining a high level of accuracy and speed. In fact, it outperforms nearly all existing open-vocabulary detection methods.

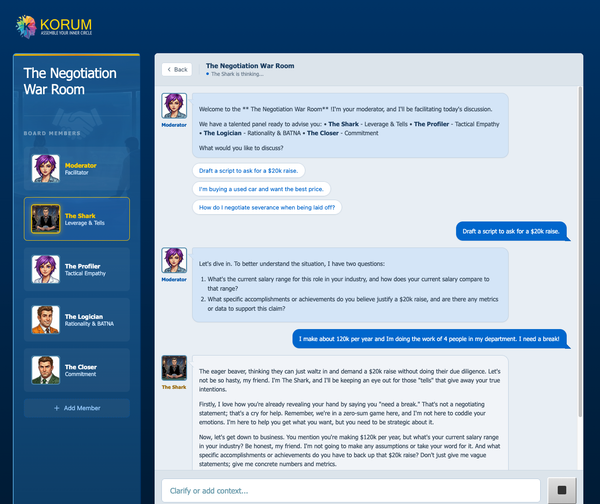

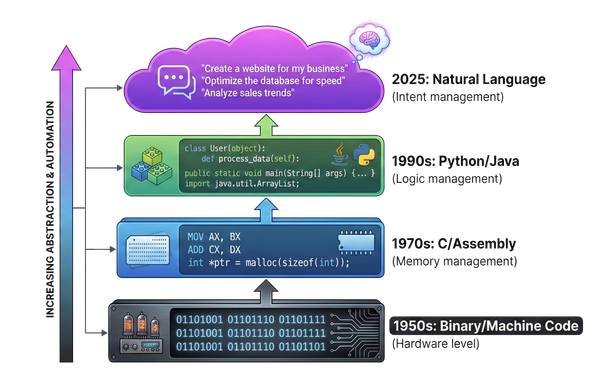

The You Only Look Once (YOLO) framework was introduced in 2015. It was considered groundbreaking in its time because it classified objects and predicted their bounding boxes in a single evaluation step. While fast, it was generally limited to the predefined categories of objects that the model was trained on (for example: roads).
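To make the "single evaluation step" idea concrete, here is a heavily simplified sketch of how one forward pass can yield both a class and a box for each grid cell. It is not the actual YOLO code: the `decode` helper, the one-box-per-cell layout, and all the numbers are assumptions made up for illustration. It also shows the limitation mentioned above, since the detector can only ever answer with a label from its fixed `CLASSES` list.

```python
# Toy single-pass detector output: one prediction per grid cell.
# Each cell holds (x_center, y_center, width, height, objectness, class_scores).
# The layout and all values are hypothetical, chosen only for illustration.
CLASSES = ["person", "car", "dog"]  # fixed when the model was trained


def decode(grid, threshold=0.5):
    """Turn raw per-cell predictions into (label, score, box) detections."""
    detections = []
    for x, y, w, h, objectness, class_scores in grid:
        # Pick the most likely class among the predefined ones.
        best = max(range(len(CLASSES)), key=lambda i: class_scores[i])
        # Class confidence = objectness * conditional class probability.
        score = objectness * class_scores[best]
        if score >= threshold:
            # Convert center/size to corner coordinates (x1, y1, x2, y2).
            box = (x - w / 2, y - h / 2, x + w / 2, y + h / 2)
            detections.append((CLASSES[best], score, box))
    return detections


grid = [
    (0.30, 0.40, 0.20, 0.40, 0.9, [0.8, 0.1, 0.1]),  # confident "person"
    (0.70, 0.60, 0.30, 0.20, 0.2, [0.3, 0.4, 0.3]),  # low objectness, dropped
]

for label, score, box in decode(grid):
    print(label, round(score, 2), [round(v, 2) for v in box])
```

Both the label and the box come out of the same pass over the grid, with no separate region-proposal stage, which is where the speed comes from.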

YOLO-World takes a different approach: it trains on large-scale, multi-domain datasets and introduces a new method for linking visual and linguistic representations, called Re-parameterizable Vision-Language Path Aggregation Network (RepVL-PAN). This method improves baseline detection, but it really shines on edge cases, where traditional detection methods falter. As with most models, training on large volumes of data improves the ability to generalize and abstract, which in turn improves zero-shot performance. In testing, the authors report that YOLO-World outperforms existing open-vocabulary models in accuracy, with speedups of up to 20x.
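The general idea behind open-vocabulary detection can be sketched in a few lines: embed each image region and each text prompt into the same vector space, then label the region with the closest prompt. The snippet below is a toy illustration of that matching step only. It is not RepVL-PAN, the 3-dimensional vectors are invented, and `label_region` is a hypothetical helper; in a real system the embeddings would come from learned image and text encoders.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def label_region(region_embedding, text_embeddings):
    """Return the prompt most similar to the region, plus its score."""
    scores = {
        prompt: cosine(region_embedding, vec)
        for prompt, vec in text_embeddings.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]


# Hypothetical embeddings; the vocabulary is just data supplied at inference.
text_embeddings = {
    "forklift": [0.9, 0.1, 0.0],
    "pallet": [0.1, 0.9, 0.1],
    "safety vest": [0.0, 0.2, 0.9],
}
region = [0.8, 0.2, 0.1]  # made-up embedding of one detected region

label, score = label_region(region, text_embeddings)
print(label, round(score, 3))
```

Because the vocabulary is a dictionary of prompts rather than a fixed output layer, adding a new category means adding a new entry, not retraining the detector.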

In this example, YOLO-World processes a video in real time; the reported speed is 52 FPS on an NVIDIA V100 GPU:
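For a sense of what 52 FPS means in practice, a quick back-of-the-envelope calculation helps. The helpers below are my own, and the 30 fps camera rate is an assumed typical value rather than something from the paper.

```python
def frame_budget_ms(fps):
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


def keeps_up(detector_fps, camera_fps):
    """True if the detector finishes each frame before the next one arrives."""
    return frame_budget_ms(detector_fps) <= frame_budget_ms(camera_fps)


detector_fps = 52.0  # figure quoted for YOLO-World
camera_fps = 30.0    # assumed camera frame rate

print(f"per-frame time at {detector_fps:.0f} FPS: {frame_budget_ms(detector_fps):.1f} ms")
print(f"camera delivers a frame every {frame_budget_ms(camera_fps):.1f} ms")
print("keeps up with camera:", keeps_up(detector_fps, camera_fps))
```

At roughly 19 ms per frame, the detector finishes well inside the roughly 33 ms gap between frames of a 30 fps stream, leaving headroom for tracking or downstream logic.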

REAL-TIME object detection WITHOUT TRAINING

— SkalskiP (@skalskip92) February 6, 2024

YOLO-World is a new SOTA open-vocabulary object detector that outperforms previous models in terms of both accuracy and speed. 35.4 AP with 52.0 FPS on V100.


Practical applications for real-time object recognition are numerous, including warehousing, surveillance, and medicine. But the field that perhaps stands to gain the most from an effective real-time vision/cognition model is robotics.

Multi-modal AI can finally bring together perception, cognition and action, solving the thorny problem of handling complex, dynamic environments.

The old approach to robotics brute-forced perception. It fused sensor data and attempted to continuously compare reality with the internal model of the world that is driving the robot. This rigid framework works fine in industrial settings, where the environment is static, but it cannot cope with the messiness of the real world.

Tesla recently overhauled its approach to self-driving with V12.0 of FSD, ditching the old brute-force method for an end-to-end AI model that takes raw pixel data from the cameras and outputs steering, braking and acceleration commands.

I believe we are at the dawn of a new era in robotics, and AI-enabled vision technology is a key driver of the upcoming wave. I will be exploring this topic more in future posts.

🧪 Demo: https://huggingface.co/spaces/stevengrove/YOLO-World

📰 Post: https://twitter.com/skalskip92/status/1754916531622854690

📄 Paper: https://arxiv.org/abs/2401.17270

💾 Code: https://github.com/AILab-CVC/YOLO-World

🎧 Beyond Words: Audio Flamingo

- Understands non-verbal sounds

- Quickly adapts to new tasks

Audio Flamingo is a new audio model that goes beyond transcription. It covers the wider acoustic spectrum, including non-speech sounds and music, within a unified multi-modal framework built on an instruction-tuned LLM.

How many different voices are there?

"Three"

"A bus engine driving in the distance then nearby followed by compressed air releasing while a woman and a child talk in the distance"

Can you tell me more about the vocals in this track?

"The vocals in this track are by a male singer. His voice is deep, resonant, and powerful, adding to the overall emotional impact of the song."

This model is unique in several important ways:

- Understands meaning from the blend of speech, intonation, tonality and non-verbal sounds. While traditional audio models struggle with nuance, Audio Flamingo seems capable of picking up subtext and inferring sentiment across verbal and non-verbal cues.

- Uses in-context learning (ICL) and retrieval-augmented generation (RAG) to adapt quickly to new tasks, showing few-shot learning potential.

- Robust multi-turn dialogue capability, able to maintain contextually coherent conversations with the user.

- A sliding window feature extractor to better capture temporal information in long recordings. This contrasts with other audio models that compute a single embedding for an entire audio clip, losing temporal information.
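The sliding window point is easy to see with a toy example. In the sketch below, mean energy stands in for the learned audio features (Audio Flamingo uses a neural encoder, not this), and the window and hop sizes are arbitrary values I picked for illustration.

```python
def sliding_windows(samples, window, hop):
    """Yield overlapping slices of `samples`, `window` long, stepping by `hop`."""
    for start in range(0, len(samples) - window + 1, hop):
        yield samples[start:start + window]


def energy(chunk):
    """Mean squared amplitude; a stand-in for a learned audio embedding."""
    return sum(x * x for x in chunk) / len(chunk)


# Toy recording: silence for the first half, a loud sound for the second half.
signal = [0.0] * 8 + [1.0] * 8

# One feature for the whole clip: the quiet-then-loud structure is averaged away.
single = energy(signal)

# One feature per window: the change over time stays visible.
per_window = [energy(w) for w in sliding_windows(signal, window=8, hop=4)]

print("single embedding:", single)        # 0.5
print("windowed features:", per_window)   # [0.0, 0.5, 1.0]
```

The single value of 0.5 would look the same for a clip that is moderately loud throughout, while the windowed sequence shows that the sound starts quiet and gets louder. That ordering is the temporal information a single embedding loses.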

Audio Flamingo is still at a nascent stage of development, yet it offers a fascinating preview into the world of subtler modalities. The model seems to do a good job generalizing across verbal and non-verbal cues, and it would be interesting to see it incorporate a visual modality over time.

For me, it is a perfect representation of the exciting potential of what's to come in AI.

📰 Post/Demo: https://audioflamingo.github.io/

📄 Paper: https://arxiv.org/abs/2402.01831

💾 Code: https://github.com/kyegomez/AudioFlamingo