AI Agents: The Future of Software

The economic impact of AI agents will be transformative. By automating complex tasks, enhancing productivity, and enabling new business models, AI agents have the potential to create a new wave of technological innovation and reshape the global economy.

AI agents have the potential to transform software development. In the near future, these agents could autonomously design and construct entire computer systems, converting requirements into fully functional applications without human coding. By embedding AI at the heart of software systems, we could create self-healing architectures capable of dynamically rerouting processes around failures and continuously optimizing for performance and security. AI agents may even foresee user needs and proactively enhance functionality.

The potential of AI in software extends beyond mere automation; it represents a fundamental shift in how we conceive, create, and interact with digital systems, potentially ushering in an era of unprecedented technological progress.

The economic impact of AI agents is set to be transformative, driving significant shifts across industries and reshaping the dynamics of the global economy.

The Evolution of Automation

| Technology | Tasks | Flexibility | Autonomy |
|---|---|---|---|
| Scripting | Simple | High | None |
| RPA | Medium | Medium | None |
| APIs | Complex | Low | None |
| AI Agents | Complex | High | High |

To understand the significance of AI agents, let's examine how they differ from existing automation technologies:

- Scripting: The most basic form of automation, like VBA macros in Excel, automates simple tasks within a single application.

- Robotic Process Automation (RPA): More advanced than scripting, RPA connects several applications. It's quick to implement but can be brittle when applications change.

- APIs/Orchestrations: These robust and scalable systems connect services and APIs but are labor-intensive to develop and maintain.

- AI Agents: These have the potential to surpass all previous techniques, offering the ability to solve complex tasks with flexibility and autonomy.

Defining an AI Agent

An autonomous AI agent is a self-directed system that perceives its environment, makes independent decisions, and takes actions to achieve specific goals without constant human oversight. It can adapt to new situations and improve its performance over time through learning and experience.
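As a rough illustration of the perceive, decide, act cycle in this definition, here is a minimal sketch. The `ThermostatAgent` class, its method names, and the dictionary-based environment are hypothetical choices made for the example. The toy agent records its actions but does not learn from them, so it covers only the control loop, not the adaptation part of the definition.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Toy agent (hypothetical): its goal is to hold a room at a target temperature."""
    target: float
    history: list = field(default_factory=list)  # actions taken so far

    def perceive(self, environment: dict) -> float:
        # Read the part of the environment the agent can sense.
        return environment["temperature"]

    def decide(self, temperature: float) -> str:
        # Choose an action that moves the environment toward the goal.
        if temperature < self.target - 0.5:
            return "heat"
        if temperature > self.target + 0.5:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        # Change the environment and remember what was done.
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        environment["temperature"] += delta
        self.history.append(action)


def run(agent: ThermostatAgent, environment: dict, max_steps: int = 20) -> dict:
    """Repeat perceive -> decide -> act until the goal is met or steps run out."""
    for _ in range(max_steps):
        observation = agent.perceive(environment)
        action = agent.decide(observation)
        if action == "idle":
            break
        agent.act(action, environment)
    return environment
```

Calling `run(ThermostatAgent(target=21.0), {"temperature": 17.0})` heats the room one degree per step and stops once the temperature is within half a degree of the target, with no human choosing the individual steps.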

There are several key differences between autonomous AI agents and current large language models (LLMs):

- Goal-directed behavior: Autonomous agents are designed to pursue specific objectives or goals independently. LLMs are reactive - they respond to prompts but don't have their own goals or agenda.

- Environmental interaction: Autonomous agents can directly interact with and manipulate their environment. LLMs are limited to processing and generating text without direct environmental interaction.

- Decision-making: Agents make decisions about what actions to take to achieve their goals. LLMs generate responses based on prompts but don't make real-world decisions.

- Continuous operation: Agents can operate continuously over time, learning and adapting. LLMs operate in discrete conversation sessions.

- Learning and adaptation: Agents typically have mechanisms to learn from experience and adapt their behavior over time. Current LLMs have fixed knowledge from training.

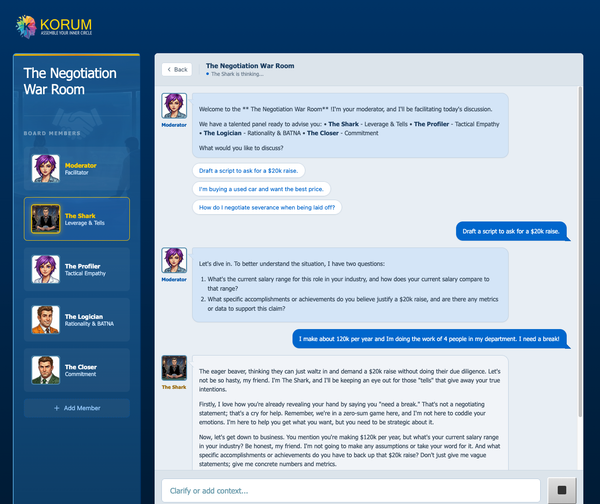

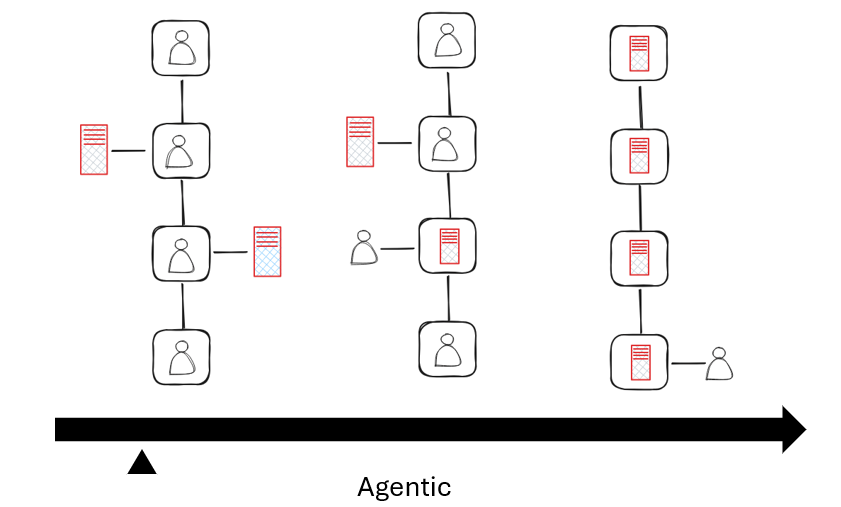

It is helpful to think about autonomy as a scale. Starting from the left you have workflows that are completely manual, with some AI assistance along the way (like using Copilot to draft your emails). This is where we are now. Next are hybrid workflows where a step or two is replaced by an AI automation, but the overall process is still manually managed. Finally, on the right are completely agentic workflows in which AI performs every single step, and the human merely monitors.

So how do we get to agentic systems on the right of this picture?

Large Language Models Are Not Enough

It is helpful to frame a possible solution in the context of current large language models (LLMs) like GPT-4.

While these models excel at tasks such as document summarization and email drafting, they fall short when it comes to complex problem-solving. LLMs alone are not able to plan or reason. In fact, an LLM only “thinks” about the next token it will produce; it doesn’t even know how it will end a sentence. To elevate AI's problem-solving capabilities, we need to develop systems that can plan and break down complex issues into smaller tasks.
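To make the next-token point concrete, here is a toy sketch of token-by-token generation. The `BIGRAMS` table and its probabilities are invented for the example. A real LLM conditions on the whole preceding context with a learned neural network rather than on a lookup of the previous word, but the outer loop has the same shape: pick one token, append it, repeat.

```python
# Hypothetical bigram "model": for each token, the probability of each next token.
BIGRAMS = {
    "<s>": {"the": 1.0},
    "the": {"agent": 0.6, "task": 0.4},
    "agent": {"plans": 0.7, "</s>": 0.3},
    "plans": {"the": 0.8, "</s>": 0.2},
    "task": {"</s>": 1.0},
}


def next_token(previous: str) -> str:
    """Greedy decoding: return the single most likely next token."""
    candidates = BIGRAMS[previous]
    return max(candidates, key=candidates.get)


def generate(max_tokens: int = 6) -> list:
    """Produce tokens one at a time; nothing beyond the next token is planned."""
    tokens = ["<s>"]
    for _ in range(max_tokens):
        token = next_token(tokens[-1])
        if token == "</s>":  # end-of-sentence marker
            break
        tokens.append(token)
    return tokens[1:]  # drop the start marker
```

With this table, greedy decoding cycles through "the agent plans" until the token budget runs out. The loop never looks ahead to decide how the sentence should end.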

Moreover, LLMs face two other significant limitations. First, they cannot learn or update their knowledge in real time, because their weights remain static after training. Their knowledge is frozen at a specific point in time, leaving them unable to incorporate new information. Second, LLMs are prone to hallucinations - generating inaccurate or unexpected outputs. For AI to take on mission-critical tasks, we must design architectures that are both resilient to these errors and capable of self-correction.

Mitigating LLM Limitations

There has been some promising work on these issues, starting with compound systems such as the recent versions of Google Gemini, ChatGPT, or Bing, in which the model searches the web for an answer and then feeds the results back to itself before responding to the user.
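The search-then-answer pattern can be sketched in a few lines. Everything here is a stand-in: `DOCUMENTS` replaces the web, `search` is a keyword match rather than a real search engine, and `fake_llm` simply returns the first context line instead of calling a model. None of this reflects how Gemini, ChatGPT, or Bing are implemented; it shows only the shape of the loop.

```python
import re

# Hypothetical in-memory "web" used instead of a real search engine.
DOCUMENTS = [
    "Open Interpreter lets LLMs run code locally.",
    "RPA connects several applications but can be brittle.",
]


def words(text: str) -> set:
    """Lowercase a string and split it into a set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def search(query: str) -> list:
    """Stand-in for web search: return documents sharing a word with the query."""
    return [doc for doc in DOCUMENTS if words(query) & words(doc)]


def fake_llm(prompt: str) -> str:
    """Stand-in for a model call: answer with the first context line, if any."""
    context = [line[2:] for line in prompt.splitlines() if line.startswith("- ")]
    return context[0] if context else "I don't know."


def answer(question: str) -> str:
    """Compound system: retrieve first, then let the model answer from the results."""
    results = search(question)
    context = "\n".join(f"- {r}" for r in results)
    prompt = f"Context:\n{context}\nQuestion: {question}"
    return fake_llm(prompt)
```

The design point is that fresh information reaches the model through the prompt, so the model's frozen training knowledge matters less.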

Another helpful technique is called a mixture of agents, where multiple language models work together to produce an answer in a series of steps. This approach has been shown to exceed GPT-4 on certain benchmarks (Mixture-of-Agents Enhances Large Language Model Capabilities).
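A layered arrangement of this kind might look like the sketch below. The three `model_*` functions are hard-coded stubs standing in for different LLMs, and the aggregator uses a simple majority vote as a stand-in for the model-based synthesis step a real mixture of agents would use. All names are hypothetical.

```python
from collections import Counter


def model_a(question: str, previous: list) -> str:
    return "4"  # stub: always answers correctly


def model_b(question: str, previous: list) -> str:
    # Stub: wrong on its own, but adopts the majority view once it sees other answers.
    return Counter(previous).most_common(1)[0][0] if previous else "5"


def model_c(question: str, previous: list) -> str:
    return "4"  # stub: always answers correctly


def aggregate(question: str, previous: list) -> str:
    """Majority vote, used here in place of an LLM that synthesizes the answers."""
    return Counter(previous).most_common(1)[0][0]


def mixture_of_agents(question: str, layers: list, aggregator) -> str:
    """Each layer sees the previous layer's answers; the aggregator gives the final one."""
    previous = []
    for layer in layers:
        previous = [model(question, previous) for model in layer]
    return aggregator(question, previous)
```

For example, `mixture_of_agents("What is 2 + 2?", [[model_a, model_b, model_c]] * 2, aggregate)` runs two layers. In the second layer `model_b` sees the first layer's answers and changes its own, so all three agree before aggregation.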

But to build a truly agentic system, we need to look at alternative cognitive architectures. A good place to start could be the human brain…

Human Brain

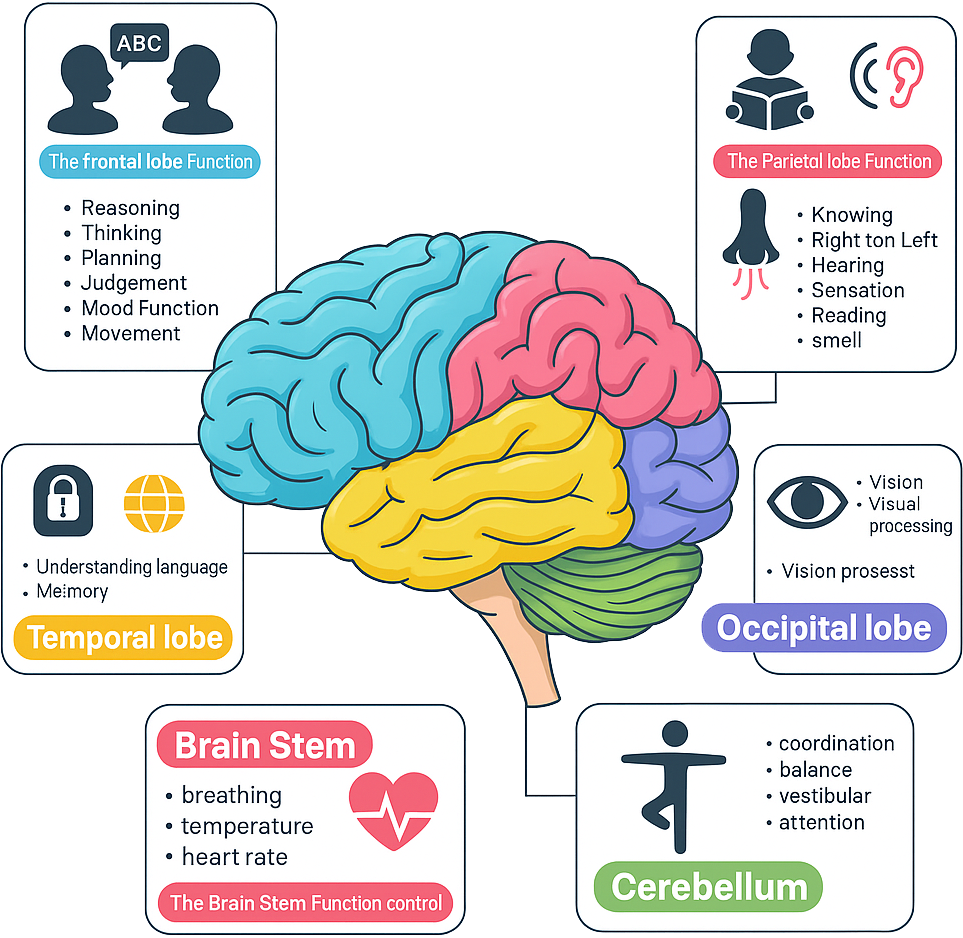

The human brain is a highly intricate organ with specialized regions, each performing distinct yet interconnected functions. These regions work together to enable complex behaviors, thoughts, and actions.

Of particular interest to us is the Frontal Lobe, a region dedicated to planning and reasoning. This area is responsible for complex cognitive functions such as decision-making, problem-solving, and controlling behavior and emotions. It plays a crucial role in our ability to plan for the future, think abstractly, and exercise judgment. Memories are primarily stored in the Temporal Lobe. Vision, the other senses, and the interface to our body are spread across additional regions.

Perhaps by copying the idea of specialization and inter-regional communication, we can try to mimic human cognitive processes with LLMs...

Designing an AI Agent

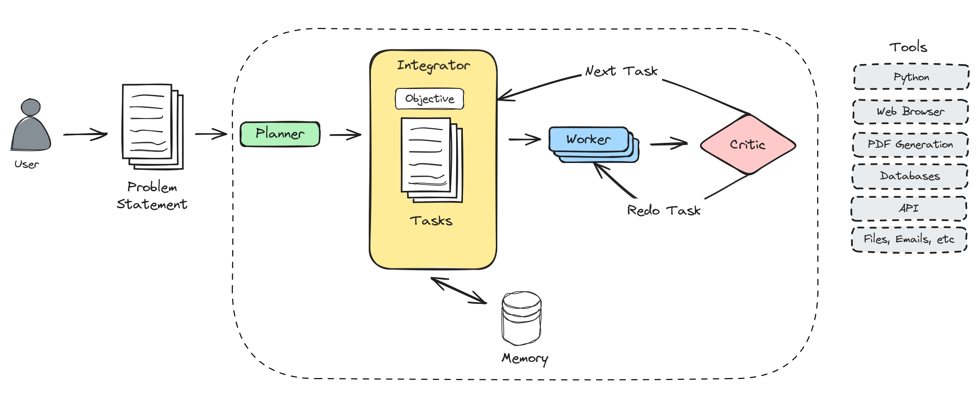

Most AI agent systems rely on an architecture vaguely resembling the brain, where multiple specialized AI agents work together to achieve a goal. This collaborative approach mimics human cognitive processes, and attempts to compensate for the limitations of LLMs.

The process begins with the Planner Agent, which handles high-level planning, breaks down tasks, prioritizes, and considers various limitations. It's comparable to the brain's Frontal Lobe. This Planner Agent outlines the tasks necessary to achieve the objective and passes them to the Integrator Agent.

The Integrator Agent oversees task management and maintains a holistic view of both the problem and its solution. The Integrator hands the tasks over to Worker Agents that can use tools like coding, web searching, or database querying to complete their work. It is important to note that a Worker Agent might not always complete its assignment correctly, potentially requiring a different approach. For this reason, there is a Critic Agent that reviews results and either approves them or provides feedback to the Worker Agent on how to adjust its method for better outcomes. This process resembles your inner monologue. Lastly, each Worker Agent's output and notes are stored in a working memory, analogous to the brain's Temporal Lobe.
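The flow described above can be sketched as a small control loop. Each function below is a hard-coded stub standing in for an LLM-backed agent, and the function names, the feedback string, and the dictionary used as working memory are assumptions made for the example, not part of any particular framework.

```python
def planner(goal: str) -> list:
    """Planner Agent: break the goal into ordered tasks (hard-coded here)."""
    return [f"research {goal}", f"draft {goal}", f"review {goal}"]


def worker(task: str, feedback: str = "") -> str:
    """Worker Agent: the first attempt is deliberately incomplete,
    and the retry with feedback succeeds."""
    return f"done: {task}" if feedback else f"partial: {task}"


def critic(result: str) -> str:
    """Critic Agent: return an empty string to approve, otherwise feedback."""
    return "" if result.startswith("done") else "finish the task"


def integrator(goal: str, max_attempts: int = 3) -> dict:
    """Integrator Agent: hand tasks to workers, loop on critic feedback,
    and store each result in working memory."""
    memory = {}
    for task in planner(goal):
        feedback = ""
        result = ""
        for _ in range(max_attempts):
            result = worker(task, feedback)
            feedback = critic(result)
            if not feedback:  # critic approved
                break
        memory[task] = result
    return memory
```

Running `integrator("report")` sends each task through one rejected attempt and one approved retry. The `max_attempts` cap is a design choice that keeps a worker that never satisfies the critic from looping forever.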

Key defining features of this multi-agent approach are:

- Autonomy: Agents make independent decisions.

- Interaction: Agents communicate and collaborate.

- Goal-orientation: Each agent pursues specific objectives.

Advantages over single LLM instances or copilots:

- Dynamic reasoning across various paths

- Ability to handle complex, large-scale problems

- Enhanced memory capabilities, overcoming LLM context limitations

Current State of the Art

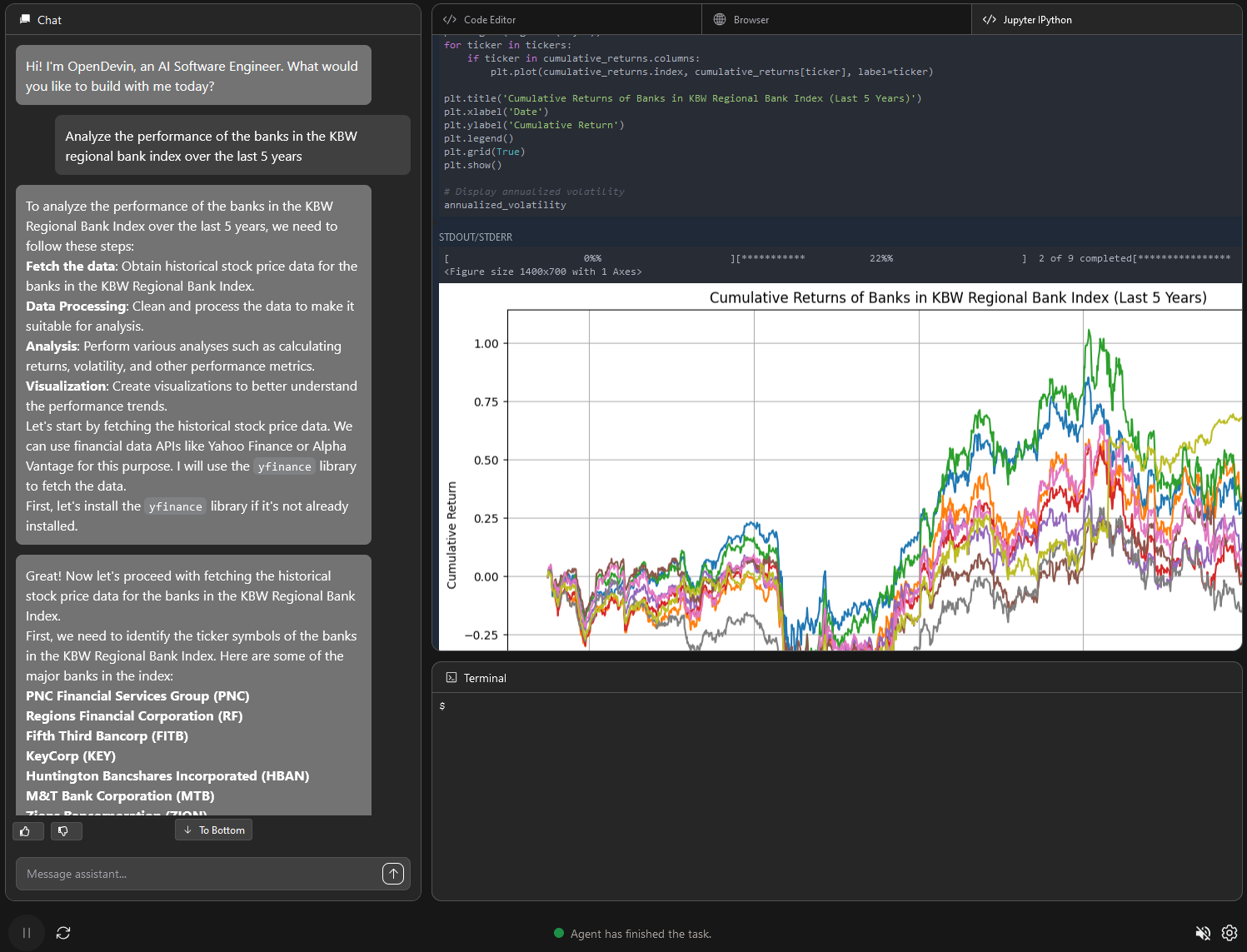

Current state-of-the-art agents are sometimes able to achieve surprising and impressive results. Below is an example of Open Interpreter controlling a desktop and using the camera to connect to a WiFi hotspot.

> Give 'vision' capability to all of your local LLMs using the power of Open Interpreter tool🤯
>
> Checkout this video by @hellokillian - Really 'WOW' example.
>
> Open Interpreter is a fully open-sourced tool, that lets LLMs run code locally (Python, Javascript, Shell, and more) is… pic.twitter.com/8vuPgUShYU
>
> Rohan Paul (@rohanpaul_ai), June 20, 2024

However, more often than not, the current generation of AI agents fails to complete tasks and generally produces unreliable results. Part of the reason for this is that LLMs are non-deterministic, so they may not take the same approach every time. Furthermore, although LLMs have made significant progress in logic and planning, they simply may not make the best decisions.

I believe the most likely area where AI agents will find near-term traction is in writing progressively larger chunks of computer code. Think of this as a very advanced version of GitHub Copilot that is capable of testing and refining code autonomously. This approach has the added benefit of generating deterministic, verifiable code that aligns with existing governance and security models.
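The test-and-refine idea can be illustrated with a toy loop that tries candidate implementations until one passes a fixed test suite. The `CANDIDATES` list stands in for successive drafts from a code-writing agent, and all names are hypothetical. Note that `exec` runs arbitrary code, so a real system would need to execute drafts in a sandbox.

```python
# Stand-ins for successive drafts produced by a code-writing agent.
CANDIDATES = [
    "def add(a, b):\n    return a - b\n",  # buggy first draft
    "def add(a, b):\n    return a + b\n",  # corrected draft
]


def passes_tests(source: str) -> bool:
    """Load a draft and check it against deterministic tests.
    WARNING: exec on untrusted code is unsafe outside a sandbox."""
    namespace = {}
    exec(source, namespace)
    add = namespace["add"]
    return add(2, 3) == 5 and add(-1, 1) == 0


def first_passing(candidates: list):
    """Return (attempt number, source) for the first draft that passes, else None."""
    for attempt, source in enumerate(candidates, start=1):
        if passes_tests(source):
            return attempt, source
    return None
```

Even if the drafting step is non-deterministic, the accepted code is an ordinary program that behaves the same on every run and can be reviewed like any other code.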

Future Outlook

The future of AI agents holds immense promise, but we're still in the nascent stages of realizing their potential. The landscape is dotted with numerous autonomous agent projects, but none has yet reached a level of maturity that makes it viable for real use. While these early efforts demonstrate the foundational technologies and offer glimpses of what’s possible, they struggle with reliability and overall capability. As research progresses and technology evolves, we can anticipate incremental progress that will start to fill these gaps.

The economic incentives for developing AI agents are enormous, promising substantial returns for those who invest in this transformative technology. As AI agents become more advanced and integrated into various sectors, they offer unprecedented opportunities for cost reduction and efficiency gains.

Appendix: AI Agent Projects